Understanding the Importance of Data Warehousing

In today’s digital age, data has become the lifeblood of businesses across all industries. The ability to gather, store, and analyze vast amounts of data has transformed the way organizations operate and make decisions. Amidst this data deluge, data warehousing has emerged as a critical component in managing and leveraging data effectively. Understanding the importance of data warehousing is paramount for businesses striving to stay competitive in an increasingly data-driven world.

What is Data Warehousing?

At its core, a data warehouse is a centralized repository that stores integrated data from disparate sources within an organization. Unlike operational databases that are designed for transactional processing, data warehouses are optimized for analytical queries and reporting. By consolidating data from various operational systems such as sales, marketing, finance, and more, data warehouses provide a unified view of an organization’s information, facilitating informed decision-making.

Importance of Data Warehousing:

- Data Integration and Consolidation: One of the primary benefits of data warehousing is its ability to integrate data from multiple sources. In today’s complex business environment, organizations often operate with diverse systems and databases. A data warehouse acts as a central hub, harmonizing disparate data into a consistent format. This integrated view enables stakeholders to analyze information across departments and gain valuable insights.

- Improved Data Quality and Consistency: Data inconsistencies and discrepancies are common challenges faced by organizations dealing with multiple data sources. Data warehousing addresses these issues by implementing processes for data cleansing, transformation, and standardization. By maintaining a single source of truth, data warehouses ensure data integrity and accuracy, thereby enhancing decision-making reliability.

- Enhanced Business Intelligence (BI): Data warehouses serve as the foundation for robust business intelligence initiatives. By storing historical and current data in a structured format, organizations can perform complex analytics, generate meaningful reports, and derive actionable insights. BI tools integrated with data warehouses empower users to visualize trends, identify patterns, and make data-driven decisions with confidence.

- Scalability and Performance: As data volumes continue to grow exponentially, scalability and performance become critical considerations. Data warehouses are designed to handle large datasets efficiently, offering scalability to accommodate increasing data volumes without compromising performance. With optimized query processing and indexing strategies, data warehouses ensure timely access to information, enabling agile decision-making.

- Support for Strategic Initiatives: In today’s competitive landscape, agility and responsiveness are essential for staying ahead. Data warehouses play a pivotal role in supporting strategic initiatives such as predictive analytics, data mining, and machine learning. By leveraging historical data stored in the warehouse, organizations can uncover valuable insights, anticipate market trends, and proactively adapt their strategies.

- Regulatory Compliance and Governance: Compliance with regulatory requirements such as GDPR, HIPAA, or SOX is a top priority for organizations handling sensitive data. Data warehouses facilitate compliance efforts by providing robust security features, access controls, and audit trails. By adhering to data governance best practices, organizations can ensure data privacy, integrity, and regulatory compliance.
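
The integration and data quality points above can be made concrete with a small sketch. It assumes two hypothetical source extracts (`crm_rows` and `billing_rows`, with invented field names) that describe the same customers using different conventions, and shows one possible way to map them onto a single consistent format. It is an illustration under those assumptions, not a production pipeline.

```python
from datetime import datetime

# Hypothetical extracts from two operational systems with different conventions.
crm_rows = [
    {"customer_id": "C-001", "email": "Ana@Example.com ", "signup": "2023-01-15"},
    {"customer_id": "C-002", "email": "bob@example.com", "signup": "2023-02-01"},
]
billing_rows = [
    {"cust": "c-001", "mail": "ana@example.com", "created": "15/01/2023"},
    {"cust": "C-003", "mail": "CHO@EXAMPLE.COM", "created": "03/03/2023"},
]

def normalize(customer_id, email, date_text, date_format):
    """Map one source record onto the shared warehouse format."""
    return {
        "customer_id": customer_id.strip().upper(),
        "email": email.strip().lower(),
        "signup_date": datetime.strptime(date_text, date_format).date().isoformat(),
    }

def consolidate(crm, billing):
    """Merge both sources, keeping one record per customer_id (first source wins)."""
    unified = {}
    for row in crm:
        rec = normalize(row["customer_id"], row["email"], row["signup"], "%Y-%m-%d")
        unified.setdefault(rec["customer_id"], rec)
    for row in billing:
        rec = normalize(row["cust"], row["mail"], row["created"], "%d/%m/%Y")
        unified.setdefault(rec["customer_id"], rec)
    return sorted(unified.values(), key=lambda r: r["customer_id"])

customers = consolidate(crm_rows, billing_rows)
for record in customers:
    print(record)
```

The "first source wins" rule is only one possible conflict policy. A real warehouse would typically record which system is authoritative for each attribute.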

Defining the Goals and Objectives of Your Enterprise Data Warehouse

Assessing Current Data Infrastructure and Needs

In today’s digital age, data reigns supreme. It’s the backbone of decision-making, the fuel for innovation, and the lifeblood of businesses across industries. But as the volume, velocity, and variety of data continue to surge, it’s imperative for organizations to periodically assess their data infrastructure and anticipate future needs to stay ahead of the curve.

Understanding Current Data Infrastructure:

Before delving into future needs, a thorough understanding of the present data infrastructure is crucial. This involves scrutinizing the existing systems, processes, and technologies that support data collection, storage, analysis, and dissemination.

- Data Sources: Identify all the sources generating data within the organization. This could range from customer interactions and sales transactions to IoT devices and social media feeds.

- Storage Mechanisms: Evaluate how data is stored – whether it’s on-premises servers, cloud platforms, or a hybrid model. Assess the scalability, security, and accessibility of these storage solutions.

- Data Processing Tools: Analyze the tools and technologies used for data processing and analytics. Are they capable of handling the current workload efficiently? Are there any bottlenecks or performance issues?

- Data Governance and Compliance: Review the policies and procedures governing data usage, privacy, and compliance with regulations like GDPR or CCPA. Ensure that data management practices align with industry standards and legal requirements.

Anticipating Future Needs:

Once the current data infrastructure is comprehensively evaluated, it’s time to turn our attention to the future. Anticipating the evolving needs of the organization and the broader industry landscape is essential for staying competitive and innovative.

- Scalability: As data volumes continue to grow exponentially, scalability becomes paramount. Evaluate whether the existing infrastructure can seamlessly accommodate this growth or if upgrades are necessary to prevent performance degradation.

- Real-Time Analytics: With the increasing demand for real-time insights, assess whether the current data processing tools can deliver instantaneous analytics. Explore technologies like stream processing and in-memory databases to enable faster decision-making.

- AI and Machine Learning: Embrace the potential of AI and machine learning to derive actionable insights from data. Invest in algorithms and models that can automate tasks, uncover hidden patterns, and enhance predictive capabilities.

- Data Security: In an era plagued by cyber threats and data breaches, bolstering data security measures is non-negotiable. Consider implementing advanced encryption techniques, robust access controls, and continuous monitoring to safeguard sensitive information.

- Data Democratization: Empower employees across the organization to access and leverage data effectively. Invest in self-service analytics platforms and intuitive visualization tools to democratize data and foster a culture of data-driven decision-making.
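
To illustrate the stream processing idea mentioned under real-time analytics, here is a minimal sketch of a sliding-window aggregate. The class name `SlidingWindowSum` and the sample events are invented for illustration, and the sketch assumes events arrive in timestamp order. Dedicated stream processing engines handle out-of-order data, persistence, and scale, none of which is covered here.

```python
from collections import deque

class SlidingWindowSum:
    """Running total of event values seen in the last `window_seconds`."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def add(self, timestamp, value):
        """Record an event and return the total for the current window."""
        self.events.append((timestamp, value))
        self.total += value
        # Evict events that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total

window = SlidingWindowSum(window_seconds=60)
stream = [(0, 10.0), (30, 5.0), (61, 2.0), (100, 1.0)]  # (seconds, amount)
totals = [window.add(ts, amount) for ts, amount in stream]
print(totals)
```

Each call updates the total incrementally instead of rescanning history, which is what allows an up-to-date figure as soon as an event arrives.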

Establishing Data Governance and Quality Standards

In today’s data-driven world, where information flows ceaselessly and organizations depend on it for their decisions, the need for robust data governance and quality standards cannot be overstated. Data is often hailed as the new oil, but like oil, it needs refining to extract its true value. Establishing effective data governance and quality standards is akin to building a sturdy refinery, ensuring that the data fueling your organization is clean, reliable, and consistent. Let’s delve into the intricacies of this crucial process.

Understanding Data Governance:

Data governance encompasses the strategies, processes, and policies that ensure data is managed effectively throughout its lifecycle. It establishes accountability, defines roles and responsibilities, and sets guidelines for data usage, storage, and security. At its core, data governance aims to align data initiatives with business objectives while mitigating risks and ensuring compliance with regulations such as GDPR, HIPAA, or CCPA.

Why Quality Standards Matter:

Data quality directly impacts the reliability and effectiveness of business operations. Poor-quality data leads to erroneous insights, flawed decision-making, and ultimately, loss of revenue and reputation. Quality standards encompass data accuracy, completeness, consistency, timeliness, and relevance. By adhering to these standards, organizations can enhance trust in their data, drive operational efficiency, and gain a competitive edge.

Key Steps in Establishing Data Governance and Quality Standards:

- Define Objectives and Scope: Start by outlining clear objectives for your data governance initiative. Identify the scope of data governance, including the types of data to be governed and the stakeholders involved.

- Assess Current State: Conduct a thorough assessment of your organization’s current data landscape. Identify existing data governance practices, data sources, quality issues, and gaps in processes.

- Establish Governance Framework: Develop a governance framework tailored to your organization’s needs. Define data policies, standards, and procedures, along with roles and responsibilities for data stewards, custodians, and users.

- Implement Data Quality Measures: Deploy tools and technologies to monitor and improve data quality continuously. Implement data profiling, cleansing, and validation processes to ensure data accuracy and consistency.

- Ensure Compliance: Stay abreast of regulatory requirements and industry standards pertaining to data governance and quality. Implement mechanisms to ensure compliance and mitigate risks associated with data privacy and security.

- Educate and Train: Invest in training programs to educate employees about the importance of data governance and quality. Foster a culture of data stewardship and accountability across the organization.

- Monitor and Iterate: Establish metrics and KPIs to measure the effectiveness of your data governance and quality efforts. Continuously monitor data quality, solicit feedback from stakeholders, and iterate on your governance framework to adapt to evolving business needs.
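
The profiling and monitoring steps above can be sketched as a few simple ratios. The function below is a hypothetical example: the field names, the sample rows, and the deliberately loose email pattern are all assumptions. It shows one way to turn the completeness, uniqueness, and validity dimensions into numbers that can be tracked as KPIs.

```python
import re

# Loose illustrative pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def profile(rows, key_field, required_fields):
    """Return simple completeness, uniqueness, and validity ratios for `rows`."""
    total = len(rows)
    if total == 0:
        return {"completeness": 1.0, "uniqueness": 1.0, "email_validity": 1.0}
    # A row is complete when every required field is present and non-empty.
    complete = sum(
        all(row.get(field) not in (None, "") for field in required_fields)
        for row in rows
    )
    distinct_keys = len({row.get(key_field) for row in rows})
    valid_emails = sum(
        bool(EMAIL_PATTERN.match(row.get("email") or "")) for row in rows
    )
    return {
        "completeness": complete / total,
        "uniqueness": distinct_keys / total,
        "email_validity": valid_emails / total,
    }

sample = [
    {"id": 1, "email": "ana@example.com", "country": "PT"},
    {"id": 2, "email": "not-an-email", "country": "DE"},
    {"id": 2, "email": "bob@example.com", "country": ""},
    {"id": 4, "email": None, "country": "US"},
]
report = profile(sample, key_field="id", required_fields=["id", "email", "country"])
print(report)
```

Running such a profile on every load and comparing the ratios against agreed thresholds is one lightweight way to detect quality regressions early.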

Benefits of Effective Data Governance and Quality Standards:

- Enhanced Decision-Making: Reliable and high-quality data enables informed decision-making at all levels of the organization, leading to better outcomes and competitive advantage.

- Improved Operational Efficiency: Streamlined data processes and standardized practices reduce redundancy, errors, and inefficiencies, driving operational excellence.

- Increased Trust and Credibility: By demonstrating a commitment to data governance and quality, organizations foster trust among customers, partners, and regulatory bodies, enhancing their reputation and credibility.

- Risk Mitigation: Proactive management of data governance and quality helps mitigate risks associated with data breaches, compliance violations, and reputational damage.

Selecting the Right Technology Stack for Your Data Warehouse

In today’s data-driven world, businesses rely heavily on data warehouses to store, manage, and analyze vast amounts of information. However, with the plethora of technologies available, selecting the right technology stack for your data warehouse can be a daunting task. A well-thought-out technology stack is crucial for ensuring optimal performance, scalability, and cost-effectiveness. In this section, we’ll delve into the key considerations to keep in mind when choosing the ideal technology stack for your data warehouse.

- Understand Your Requirements: Before diving into the selection process, it’s essential to understand your business requirements, data volumes, types of data, and expected workload. Are you dealing with structured or unstructured data? What are your performance and scalability requirements? Answering these questions will help you narrow down your options.

- Scalability and Performance: Scalability is a critical factor, especially as your business grows and your data volumes increase. Your technology stack should be able to handle growing workloads without compromising performance. Consider technologies that offer horizontal scalability, such as cloud-based solutions like Amazon Redshift, Google BigQuery, or Snowflake.

- Data Integration and ETL: Smooth integration with existing systems and seamless Extract, Transform, Load (ETL) processes are vital for efficient data warehousing. Look for technologies that offer robust ETL capabilities or integrate well with popular ETL tools like Apache NiFi, Talend, or Informatica.

- Data Storage and Processing: The choice between traditional relational databases (RDBMS) and NoSQL databases depends on your data structure, complexity, and querying needs. RDBMS like PostgreSQL or MySQL are suitable for structured data with complex relationships, while NoSQL databases like MongoDB or Cassandra excel in handling unstructured data and offering high scalability.

- Analytical Capabilities: Advanced analytical capabilities such as data visualization, predictive analytics, and machine learning are becoming increasingly important for deriving actionable insights from your data. Consider platforms that offer built-in analytics tools or seamless integration with popular analytics platforms like Tableau, Power BI, or Looker.

- Cost Considerations: Cost is a significant factor in choosing a technology stack for your data warehouse. Cloud-based solutions offer the advantage of pay-as-you-go pricing models, eliminating the need for upfront infrastructure investment. However, be mindful of hidden costs such as data transfer fees, storage costs, and compute charges.

- Security and Compliance: Data security and regulatory compliance should never be overlooked. Ensure that the technology stack you choose provides robust security features such as encryption and access controls, and that it supports the regulations that apply to you (e.g., GDPR, HIPAA).

- Community and Support: Opt for technologies with active communities and strong vendor support. A vibrant community ensures access to a wealth of resources, including documentation, tutorials, and community forums, which can be invaluable for troubleshooting issues and sharing best practices.
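
For the cost considerations above, a back-of-the-envelope model can help compare options. The sketch below simply adds up the three usage-based components named in that item: storage, compute, and data transfer. The rates are hypothetical placeholders, not any vendor’s actual prices.

```python
def monthly_cost(storage_tb, compute_hours, egress_tb,
                 storage_rate, compute_rate, egress_rate):
    """Sum the three usage-based components of a pay-as-you-go bill."""
    return (storage_tb * storage_rate
            + compute_hours * compute_rate
            + egress_tb * egress_rate)

# Hypothetical rates in USD; substitute the figures from your vendor's price list.
RATES = {"storage_rate": 25.0, "compute_rate": 3.0, "egress_rate": 90.0}

baseline = monthly_cost(storage_tb=10, compute_hours=200, egress_tb=1, **RATES)
doubled = monthly_cost(storage_tb=20, compute_hours=400, egress_tb=2, **RATES)
print(baseline, doubled)
```

Even a rough model like this makes it easier to see which component dominates the bill as usage grows.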

Designing an Efficient Data Model and Schema

In today’s digital landscape, where data reigns supreme, designing an efficient data model and schema is paramount for businesses aiming to thrive in a data-driven world. A well-structured data model forms the foundation upon which databases are built, facilitating seamless data management, retrieval, and analysis. In this section, we delve into the intricacies of designing an efficient data model and schema, exploring key principles, strategies, and best practices.

Understanding the Basics

Before diving into the intricacies of data modeling, it’s crucial to grasp the fundamentals. A data model serves as a blueprint that defines the structure, relationships, and constraints of data within a database. It embodies the logical representation of data entities, attributes, and their interconnections. On the other hand, a schema specifies the organization of data elements and their relationships, providing a framework for database implementation.

Key Principles of Data Modeling

1. Data Normalization: Normalization is the process of organizing data to minimize redundancy and undesirable dependencies between attributes, thereby enhancing data integrity. By splitting data into separate tables so that each fact is stored only once, normalization prevents update anomalies and improves data consistency.

2. Denormalization: While normalization focuses on minimizing redundancy, denormalization involves strategically reintroducing redundancy to optimize query performance. By storing redundant data in some cases, denormalization reduces the need for complex joins and enhances query execution speed, particularly in read-heavy applications.

3. Entity-Relationship Modeling: Entity-Relationship (ER) modeling is a technique used to visualize and design the relationships between different entities within a database. It employs entities to represent real-world objects, attributes to describe properties, and relationships to depict connections between entities. ER diagrams provide a graphical representation of the database structure, aiding in the conceptualization and communication of complex data models.
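
The trade-off between normalization and denormalization can be seen in a small sketch using Python’s built-in `sqlite3` module. The table and column names are invented for illustration. The normalized design stores each customer attribute once and needs a join to report by region, while the denormalized `orders_wide` table copies the region onto every order row so the same report needs no join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: each customer attribute is stored exactly once.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        region      TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ana', 'EMEA'), (2, 'Bob', 'APAC');
    INSERT INTO orders VALUES (10, 1, 120.0), (11, 1, 80.0), (12, 2, 50.0);

    -- Denormalized: region is copied onto every order row to avoid the join.
    CREATE TABLE orders_wide AS
        SELECT o.order_id, o.amount, c.name AS customer_name, c.region
        FROM orders AS o JOIN customers AS c USING (customer_id);
""")

# Revenue by region from the normalized tables (requires a join).
with_join = conn.execute("""
    SELECT c.region, SUM(o.amount)
    FROM orders AS o JOIN customers AS c USING (customer_id)
    GROUP BY c.region ORDER BY c.region
""").fetchall()

# The same report from the denormalized table (no join).
without_join = conn.execute("""
    SELECT region, SUM(amount) FROM orders_wide
    GROUP BY region ORDER BY region
""").fetchall()

print(with_join)
print(without_join)
```

Both queries return the same totals. The price of the simpler query is that a customer changing region now requires updating every matching row in `orders_wide`.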

Strategies for Efficient Schema Design

1. Understand Data Requirements: Begin by comprehensively understanding the data requirements and business objectives. Collaborate with stakeholders to identify key entities, attributes, and relationships, ensuring that the data model aligns with organizational goals and supports future scalability.

2. Prioritize Performance: Optimize schema design for performance by considering factors such as data access patterns, query complexity, and indexing requirements. Employ techniques like vertical and horizontal partitioning, indexing, and materialized views to enhance query execution speed and resource utilization.

3. Flexibility and Scalability: Design the schema with flexibility and scalability in mind to accommodate evolving business needs and increasing data volumes. Embrace modular design principles, and consider complementary technologies such as microservices architecture and NoSQL databases where they suit the workload, to facilitate agile development and seamless scalability.
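
As a small illustration of the indexing technique mentioned under performance, the sketch below uses Python’s built-in `sqlite3` module to compare the query plan for the same filter before and after an index is created. The `sales` table and the index name are hypothetical, and the exact wording of the plan text varies between SQLite versions, so treat the printed output as indicative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sale_date TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO sales (sale_date, amount) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", float(day)) for day in range(1, 29)],
)

QUERY = "SELECT SUM(amount) FROM sales WHERE sale_date = '2024-01-02'"

def plan_details(connection, sql):
    """Return the human-readable detail column of SQLite's query plan."""
    return [row[-1] for row in connection.execute("EXPLAIN QUERY PLAN " + sql)]

plan_before = plan_details(conn, QUERY)  # expected to be a full table scan
conn.execute("CREATE INDEX idx_sales_date ON sales (sale_date)")
plan_after = plan_details(conn, QUERY)   # expected to search via the index

print(plan_before)
print(plan_after)
```

Inspecting the plan, rather than guessing, is a dependable way to confirm that an index actually serves the access pattern it was designed for.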

Best Practices:

- Maintain Consistency: Ensure consistency across the data model and schema to avoid data anomalies and discrepancies.

- Document Extensively: Document the data model and schema comprehensively, including entity definitions, attribute descriptions, and relationship constraints, to aid in understanding and maintenance.

- Iterate and Refine: Treat data modeling as an iterative process, continuously refining the schema based on feedback, evolving requirements, and performance optimization.

Integrating Data from Various Sources

In today’s data-driven landscape, businesses thrive on the ability to gather insights from diverse sources of information. However, the real challenge lies in integrating this data seamlessly to extract meaningful insights and drive informed decision-making. Integrating data from various sources is not merely a technical feat but a strategic imperative for organizations looking to stay competitive in their respective industries.

Understanding Data Integration:

Data integration refers to the process of combining data from different sources into a unified view, often involving transforming the data to ensure consistency and compatibility. This encompasses a wide array of sources, including structured databases, unstructured data like social media feeds, and semi-structured data such as XML files.

Challenges Faced:

- Data Silos: One of the primary challenges is the existence of data silos within organizations. Departments often maintain their own databases or use disparate systems, resulting in isolated pockets of data that hinder cross-functional analysis.

- Data Quality: Ensuring the quality and accuracy of data is crucial for meaningful integration. Inconsistent formats, incomplete records, and duplicate entries can skew insights and lead to erroneous conclusions.

- Compatibility Issues: Different data sources may use varying formats, standards, or protocols, making it challenging to integrate them seamlessly. This necessitates the use of compatible tools and technologies for effective data harmonization.

- Scalability: As data volumes continue to grow exponentially, scalability becomes a pressing concern. Organizations must design integration solutions capable of handling large datasets efficiently without sacrificing performance.

Best Practices for Integration:

- Define Clear Objectives: Begin by outlining your integration goals and the insights you aim to derive. This ensures alignment with business objectives and guides the integration process.

- Invest in Robust Infrastructure: Implementing a reliable infrastructure is essential for seamless data integration. This may involve deploying cloud-based solutions, data lakes, or integration platforms equipped to handle diverse data types.

- Standardize Data Formats: Establish standardized formats and schemas to promote consistency across different data sources. This facilitates easier mapping and transformation during the integration process.

- Implement Data Governance: Adopt robust data governance practices to maintain data integrity, security, and compliance throughout the integration lifecycle. This includes defining roles, access controls, and data stewardship protocols.

- Utilize ETL/ELT Tools: Leverage Extract, Transform, Load (ETL) or Extract, Load, Transform (ELT) tools to automate data ingestion, transformation, and loading processes. These tools streamline integration workflows and enhance efficiency.

- Embrace Real-time Integration: In today’s fast-paced environment, real-time data integration is becoming increasingly vital. Explore technologies like Change Data Capture (CDC) and event-driven architectures to enable real-time insights and decision-making.
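
The idea behind Change Data Capture can be sketched by comparing two snapshots of a source table and replaying only the differences. This is a deliberately simplified, snapshot-based approximation with invented data. Production CDC tools usually read the database’s transaction log instead, which this sketch does not attempt.

```python
def diff_snapshots(previous, current):
    """Compare two {primary_key: row} snapshots and list the changes between them."""
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("insert", key, row))
        elif previous[key] != row:
            changes.append(("update", key, row))
    for key in previous:
        if key not in current:
            changes.append(("delete", key, None))
    return changes

def apply_changes(target, changes):
    """Replay a change list against the warehouse copy, in place."""
    for operation, key, row in changes:
        if operation == "delete":
            target.pop(key, None)
        else:
            target[key] = row
    return target

yesterday = {1: {"status": "new"}, 2: {"status": "paid"}, 3: {"status": "paid"}}
today = {1: {"status": "paid"}, 2: {"status": "paid"}, 4: {"status": "new"}}

changes = diff_snapshots(yesterday, today)
warehouse = apply_changes(dict(yesterday), changes)
print(changes)
```

Only three of the four source rows generate work here, which is the point of the technique: the load cost scales with the amount of change rather than with the size of the table.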

Benefits of Effective Data Integration:

- 360-Degree View of Operations: Integrating data from various sources provides organizations with a comprehensive view of their operations, enabling better-informed decision-making across departments.

- Enhanced Analytics: Integrated data sets empower organizations to perform advanced analytics, including predictive modeling, trend analysis, and sentiment analysis, unlocking valuable insights for strategic planning.

- Improved Customer Experience: By consolidating customer data from disparate sources, businesses can gain a deeper understanding of customer behavior and preferences, leading to personalized experiences and targeted marketing initiatives.

- Operational Efficiency: Streamlining data integration processes reduces manual effort and minimizes the risk of errors, enhancing operational efficiency and agility within the organization.

- Competitive Advantage: Organizations that master the art of data integration gain a competitive edge by leveraging data as a strategic asset, driving innovation, and staying ahead of market trends.

Security and Compliance Considerations

In today’s digital age, where data breaches and regulatory scrutiny are constant threats, prioritizing security and compliance is paramount for businesses of all sizes. Whether you’re a small startup or a multinational corporation, understanding and implementing robust security measures while adhering to relevant regulations is non-negotiable. Let’s delve into some essential considerations to ensure your business stays secure and compliant.

- Risk Assessment and Management: Before implementing any security measures, conduct a thorough risk assessment to identify vulnerabilities and their potential impact on your business. Assess risks related to data breaches, cyberattacks, regulatory non-compliance, and more. Once these risks are identified, develop a comprehensive risk management strategy to mitigate them effectively.

- Data Protection: Protecting sensitive data is crucial for maintaining customer trust and complying with data protection regulations like GDPR, CCPA, or HIPAA. Implement encryption protocols, access controls, and regular data backups to safeguard sensitive information from unauthorized access, theft, or loss.

- Cybersecurity Measures: Cyberattacks are becoming increasingly sophisticated, making robust cybersecurity measures indispensable. Invest in firewalls, antivirus software, intrusion detection systems, and employee training programs to defend against malware, phishing attempts, ransomware, and other cyber threats.

- Compliance with Regulations: Stay abreast of relevant regulations and ensure compliance to avoid hefty fines, legal consequences, and reputational damage. Depending on your industry and geographic location, regulations such as GDPR, PCI DSS, SOX, or HIPAA may apply. Establish compliance protocols, appoint a compliance officer, and conduct regular audits to ensure adherence.

- Employee Training and Awareness: Human error remains one of the leading causes of security breaches. Educate employees about cybersecurity best practices, such as creating strong passwords, identifying phishing emails, and following security protocols. Foster a culture of security awareness and encourage employees to report suspicious activities promptly.

- Vendor Management: Many businesses rely on third-party vendors for various services, exposing them to additional security risks. Conduct due diligence when selecting vendors, ensuring they adhere to security and compliance standards. Implement vendor risk management processes, including regular assessments and contractually binding security requirements.

- Incident Response Plan: Despite preventive measures, security incidents may still occur. Establish a robust incident response plan outlining procedures for identifying, containing, and mitigating security breaches. Assign roles and responsibilities, establish communication channels, and conduct regular drills to test the effectiveness of the plan.

- Continuous Monitoring and Improvement: Security is not a one-time effort but a continuous process. Implement mechanisms for continuous monitoring of security controls, threat intelligence, and compliance status. Stay agile and adapt security measures in response to evolving threats and regulatory changes.

- Documentation and Record-Keeping: Maintain thorough documentation of security policies, procedures, and compliance activities. Keep detailed records of security incidents, audits, and compliance assessments. Documentation not only facilitates regulatory compliance but also aids in post-incident analysis and continuous improvement efforts.

- Board and Executive Involvement: Security and compliance are not just IT concerns but strategic business imperatives. Ensure active involvement and oversight from the board and executive leadership. Establish clear lines of communication between the security team and senior management to facilitate decision-making and resource allocation.
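
Several of the controls above (access controls, protection of sensitive fields, and record-keeping) can be illustrated together in a short sketch. Everything here is hypothetical: the roles, the column policy, and the placeholder key are invented for the example. Keyed hashing is shown as one possible pseudonymization technique and is not a substitute for encryption at rest or in transit.

```python
import hashlib
import hmac

# Hypothetical policy: which roles may see which columns in clear text.
COLUMN_ACCESS = {
    "analyst": {"order_id", "amount"},
    "compliance": {"order_id", "amount", "email"},
}
SECRET_KEY = b"replace-with-a-managed-secret"  # placeholder; load from a secrets manager

audit_log = []

def pseudonymize(value):
    """Replace a value with a keyed hash so rows stay joinable but unreadable."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened for readability in this sketch

def read_row(role, row):
    """Return the row as `role` may see it, and record the access."""
    allowed = COLUMN_ACCESS.get(role, set())  # unknown roles see nothing in clear
    visible = {
        column: value if column in allowed else pseudonymize(str(value))
        for column, value in row.items()
    }
    audit_log.append({"role": role, "columns": sorted(row)})
    return visible

row = {"order_id": 10, "amount": 120.0, "email": "ana@example.com"}
analyst_view = read_row("analyst", row)
compliance_view = read_row("compliance", row)
print(analyst_view)
```

Because the same input always maps to the same pseudonym under a given key, analysts can still count or join on the masked column without seeing the underlying value.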

Creating Accessible and Intuitive Data Visualization Tools

In today’s data-driven world, the ability to effectively communicate insights through visualizations is paramount. However, these visualizations must not only be visually appealing but also accessible to all users, regardless of their abilities or disabilities. Creating accessible and intuitive data visualization tools ensures that everyone can glean valuable insights from the data presented. Let’s delve into how to achieve this delicate balance.

Understanding Accessibility:

Accessibility in data visualization refers to making sure that the information presented is perceivable, operable, understandable, and robust for all users, including those with disabilities. This includes considerations for individuals with visual, auditory, motor, or cognitive impairments. Here are some key principles to keep in mind:

- Perceivable: Ensure that all users can perceive the information being presented. Provide alternative text descriptions for images, use high-contrast colors, and offer options for resizing text.

- Operable: Make sure that users can navigate and interact with the visualization easily. This includes providing keyboard navigation options, clear and consistent navigation structures, and ensuring that interactive elements are easily identifiable.

- Understandable: Ensure that the visualization is clear and easy to understand for all users. Use simple and concise language, provide clear labels and instructions, and avoid using complex visualizations that may be difficult to interpret.

- Robust: Ensure that the visualization is robust and compatible with a variety of assistive technologies and devices. Test the tool with screen readers, keyboard-only navigation, and other assistive technologies to ensure compatibility.
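
The advice to use high-contrast colors can be checked numerically. The sketch below computes the contrast ratio between two colors using the relative luminance formula from the WCAG 2.x guidelines, which ask for at least 4.5:1 for normal text and 3:1 for graphical elements such as chart lines. The function names and sample colors are illustrative choices.

```python
def relative_luminance(hex_color):
    """Relative luminance of an sRGB color such as '#1f77b4' (WCAG 2.x definition)."""
    hex_color = hex_color.lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (0, 2, 4)]
    # Undo the sRGB gamma encoding to get linear-light channel values.
    linear = [
        c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
        for c in channels
    ]
    red, green, blue = linear
    return 0.2126 * red + 0.7152 * green + 0.0722 * blue

def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (identical) up to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

print(round(contrast_ratio("#000000", "#ffffff"), 2))
print(contrast_ratio("#777777", "#ffffff") >= 4.5)
```

A check like this can be run over a chart palette before release, so that low-contrast label or series colors are caught without relying on visual inspection alone.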

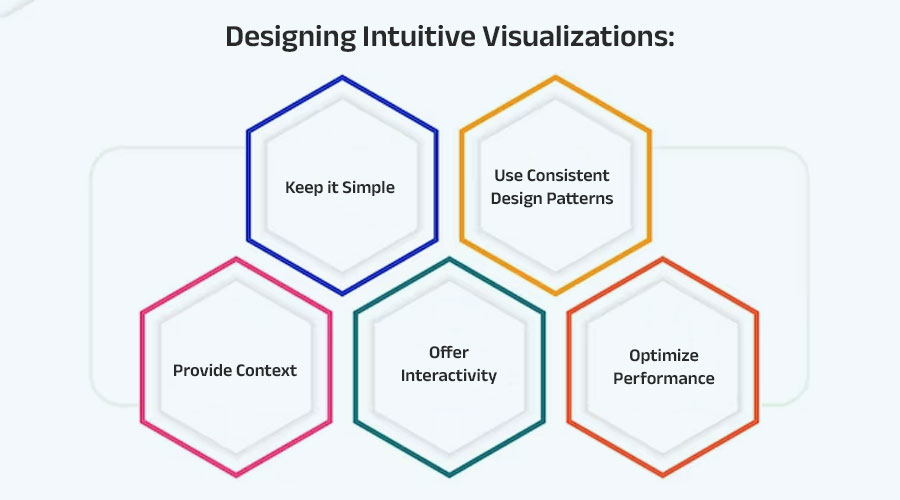

Designing Intuitive Visualizations:

In addition to being accessible, data visualizations should also be intuitive and easy to understand for all users. Here are some tips for designing intuitive visualizations:

- Keep it Simple: Avoid cluttering the visualization with unnecessary elements. Focus on presenting the most important information in a clear and concise manner.

- Use Consistent Design Patterns: Use consistent design patterns and visual cues throughout the visualization to help users understand how to interact with the data.

- Provide Context: Provide context and explain the significance of the data being presented. Use annotations, labels, and captions to provide additional information where necessary.

- Offer Interactivity: Allow users to interact with the visualization to explore the data further. Provide options for filtering, sorting, and drilling down into specific data points.

- Optimize Performance: Ensure that the visualization loads quickly and performs well, even with large datasets. Optimize the code and use efficient rendering techniques to minimize loading times.
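One way to act on the performance tip is to reduce the number of points before they reach the chart. The following is a minimal sketch of bucket averaging, one of several possible downsampling techniques and not the only option; the function name and parameters are illustrative.

```python
from statistics import mean


def downsample(points: list[tuple[float, float]], max_points: int) -> list[tuple[float, float]]:
    """Reduce (x, y) points to at most max_points by averaging consecutive buckets."""
    if max_points <= 0:
        raise ValueError("max_points must be positive")
    if len(points) <= max_points:
        return list(points)
    bucket_size = -(-len(points) // max_points)  # ceiling division
    reduced = []
    for start in range(0, len(points), bucket_size):
        bucket = points[start:start + bucket_size]
        # Each bucket collapses to a single point at its average x and y.
        reduced.append((mean(x for x, _ in bucket), mean(y for _, y in bucket)))
    return reduced
```

Averaging smooths out spikes, so if outliers matter to your audience, a method that preserves extremes may be a better fit.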

Tools and Technologies:

There are many tools and technologies available for creating accessible and intuitive data visualizations. Some popular options include:

- D3.js: A powerful JavaScript library for creating interactive data visualizations.

- Tableau: A data visualization tool that offers a wide range of features for creating accessible visualizations.

- Chart.js: A simple yet versatile JavaScript library for creating responsive and accessible charts.

- Power BI: A business analytics tool that allows users to create interactive reports and dashboards.

When choosing a tool or technology, make sure to consider its accessibility features and compatibility with assistive technologies.

Building an Enterprise Data Warehouse from Scratch to Foster a Data-Driven Culture

In today’s digital age, data has become the lifeblood of organizations, driving strategic decision-making and competitive advantage. To harness the power of data effectively, companies need robust infrastructure, and that’s where enterprise data warehouses (EDWs) come into play. Building an EDW from scratch can be a daunting task, but with the right approach, it can pave the way for a data-driven culture within organizations. Let’s explore the journey of creating a top-notch enterprise data warehouse, step by step.


- Assess Your Data Needs: Conduct a comprehensive assessment of your data needs. What types of data will your EDW need to handle? Structured, unstructured, or semi-structured? Consider both internal and external data sources that are relevant to your business processes.

- Choose the Right Technology Stack: Selecting the appropriate technology stack is pivotal in building a robust EDW. Factors to consider include scalability, performance, ease of integration, and compatibility with existing systems. Popular choices for EDW platforms include Snowflake, Amazon Redshift, Google BigQuery, and Microsoft Azure Synapse Analytics.

- Design a Scalable Architecture: Designing a scalable architecture is essential to accommodate the growing volume and variety of data. Utilize best practices such as data partitioning, indexing, and data compression to optimize performance and minimize latency.

- Implement Data Governance and Security Measures: Data governance and security should be at the forefront of your EDW implementation. Establish robust policies and procedures to ensure data integrity, privacy, and compliance with regulatory standards such as GDPR and CCPA.

- Integrate Data from Various Sources: Data integration is a critical aspect of EDW implementation. Utilize Extract, Transform, Load (ETL) processes or modern data integration platforms to seamlessly integrate data from diverse sources into your EDW.

- Enable Self-Service Analytics: Empower users across the organization to derive insights from the EDW through self-service analytics tools. Provide intuitive dashboards, visualization tools, and ad-hoc querying capabilities to facilitate data exploration and analysis.

- Drive a Data-Driven Culture: Building a top enterprise data warehouse is not just about technology; it’s about fostering a data-driven culture within the organization. Encourage data literacy, promote collaboration between business and IT teams, and celebrate successes that come from data-backed decisions.

- Continuously Monitor and Iterate: The journey of building an EDW doesn’t end once it’s deployed. Continuously monitor performance, usage patterns, and user feedback to identify areas for improvement. Iterate on your EDW architecture and processes so that they remain aligned with evolving business needs.

- Embrace Change and Innovation: Lastly, embrace change and innovation as integral parts of your data strategy. The data landscape is constantly evolving, with new technologies and methodologies emerging rapidly. Stay agile and adaptable to leverage emerging trends and stay ahead of the competition.
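To make the data integration step more concrete, here is a minimal ETL sketch. It uses Python's built-in `sqlite3` module as a stand-in for a real warehouse platform, and the two source extracts, their field names, and the table layout are all hypothetical. The point is the shape of the process: extract records from different systems, transform them into one consistent format, and load them into shared tables.

```python
import sqlite3
from datetime import datetime

# Hypothetical source extracts; in practice these would come from files, APIs, or databases.
CRM_ROWS = [
    {"Email": " Ana@Example.com ", "SignupDate": "03/15/2024", "Region": "emea"},
    {"Email": "bo@example.com", "SignupDate": "04/02/2024", "Region": "APAC"},
]
BILLING_ROWS = [
    {"customer_email": "ana@example.com", "amount_usd": "120.50"},
    {"customer_email": "BO@example.com", "amount_usd": "80"},
    {"customer_email": "ana@example.com", "amount_usd": "30.25"},
]


def transform_customer(row):
    """Standardize one CRM record: trimmed lower-case email, ISO date, upper-case region."""
    return (
        row["Email"].strip().lower(),
        datetime.strptime(row["SignupDate"], "%m/%d/%Y").date().isoformat(),
        row["Region"].strip().upper(),
    )


def transform_payment(row):
    """Standardize one billing record: normalized email and a numeric amount."""
    return (row["customer_email"].strip().lower(), float(row["amount_usd"]))


def run_etl(conn):
    """Create the target tables if needed, then load the transformed records."""
    conn.executescript("""
        CREATE TABLE IF NOT EXISTS customers (
            email TEXT PRIMARY KEY, signup_date TEXT, region TEXT);
        CREATE TABLE IF NOT EXISTS payments (
            email TEXT REFERENCES customers(email), amount_usd REAL);
    """)
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)",
                     [transform_customer(r) for r in CRM_ROWS])
    conn.executemany("INSERT INTO payments VALUES (?, ?)",
                     [transform_payment(r) for r in BILLING_ROWS])
    conn.commit()


def revenue_by_region(conn):
    """Query that only works once both sources share the same email format."""
    return conn.execute("""
        SELECT c.region, ROUND(SUM(p.amount_usd), 2)
        FROM payments p JOIN customers c ON c.email = p.email
        GROUP BY c.region ORDER BY c.region
    """).fetchall()
```

Calling `run_etl(sqlite3.connect(":memory:"))` and then `revenue_by_region` on the same connection joins the two sources by email, which would not match without the normalization in the transform step.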

FAQs on Building an Enterprise Data Warehouse from Scratch to Foster a Data-Driven Culture

In today’s data-centric world, businesses are increasingly recognizing the value of leveraging enterprise data warehouses (EDWs) to foster a data-driven culture. An EDW serves as a centralized repository for storing, managing, and analyzing vast amounts of data from various sources within an organization. If you’re considering implementing an EDW from scratch or looking to enhance your existing setup, you likely have some burning questions. Fear not! This comprehensive FAQ guide is here to address all your queries and shed light on the journey of building an EDW to empower your organization’s data-driven aspirations.

1. What exactly is an Enterprise Data Warehouse (EDW)? An Enterprise Data Warehouse (EDW) is a centralized repository that integrates data from multiple sources across an organization. It is designed to support business intelligence (BI) and analytics applications by providing a unified view of structured and sometimes unstructured data.

2. Why is fostering a data-driven culture important for businesses? A data-driven culture empowers organizations to make informed decisions based on data insights rather than relying solely on intuition or guesswork. It enhances efficiency, fosters innovation, improves customer experiences, and ultimately drives competitive advantage in today’s dynamic business landscape.

3. What are the key benefits of implementing an EDW? Implementing an EDW offers several benefits, including:

- Centralized data storage: EDWs provide a single source of truth for all organizational data, ensuring consistency and reliability.

- Improved data accessibility: Stakeholders can access and analyze data more easily, facilitating faster decision-making.

- Enhanced data quality: By cleansing and standardizing data as it is consolidated from disparate sources, EDWs help improve data quality and integrity.

- Scalability: EDWs are designed to handle large volumes of data and can scale to accommodate growing business needs.

- Advanced analytics capabilities: EDWs support complex analytics processes, including predictive modeling, machine learning, and data visualization.

4. What are the key components of an enterprise data warehouse architecture? An enterprise data warehouse architecture typically consists of the following components:

- Data sources: These are the systems or applications from which data is extracted and loaded into the EDW.

- ETL (Extract, Transform, Load) processes: ETL processes are used to extract data from source systems, transform it into a suitable format, and load it into the EDW.

- Data storage: The data warehouse itself, where data is stored in a structured format optimized for analytics.

- Data modeling: This involves designing the structure of the data warehouse, including defining tables, relationships, and indexes.

- Analytics and reporting tools: These tools enable users to query, analyze, and visualize data stored in the EDW.
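The data modeling component is easier to picture with a small example. The sketch below builds a tiny star schema (one fact table, two dimension tables, and an index) in an in-memory SQLite database. The table names, columns, and sample rows are hypothetical, and a production EDW platform would have its own DDL options, so read it as an illustration of the structure only.

```python
import sqlite3

SCHEMA = """
CREATE TABLE dim_product (
    product_id INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    category   TEXT NOT NULL
);
CREATE TABLE dim_date (
    date_id INTEGER PRIMARY KEY,   -- e.g. 20240115
    year    INTEGER NOT NULL,
    month   INTEGER NOT NULL
);
CREATE TABLE fact_sales (
    product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
    date_id    INTEGER NOT NULL REFERENCES dim_date(date_id),
    quantity   INTEGER NOT NULL,
    revenue    REAL NOT NULL
);
CREATE INDEX idx_fact_sales_date ON fact_sales(date_id);
"""


def build_warehouse():
    """Create the schema and load a few sample rows (all values are made up)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", [
        (1, "Laptop", "Hardware"), (2, "Mouse", "Hardware"), (3, "Support plan", "Services"),
    ])
    conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [
        (20240115, 2024, 1), (20240220, 2024, 2),
    ])
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
        (1, 20240115, 2, 2000.0), (2, 20240115, 5, 100.0),
        (3, 20240220, 1, 300.0), (1, 20240220, 1, 1000.0),
    ])
    conn.commit()
    return conn


def revenue_by_category_and_month(conn):
    """Typical analytical query: join the fact table to its dimensions and aggregate."""
    return conn.execute("""
        SELECT p.category, d.year, d.month, SUM(f.revenue)
        FROM fact_sales f
        JOIN dim_product p ON p.product_id = f.product_id
        JOIN dim_date d ON d.date_id = f.date_id
        GROUP BY p.category, d.year, d.month
        ORDER BY p.category, d.year, d.month
    """).fetchall()
```

Keeping measures in the fact table and descriptive attributes in the dimensions is what lets reporting tools slice the same numbers by category, month, or any other attribute without changing the underlying data.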

5. What are some best practices for building an EDW from scratch? When building an EDW from scratch, consider the following best practices:

- Define clear objectives and requirements: Clearly define the goals and requirements of your EDW project to ensure alignment with business objectives.

- Engage stakeholders: Involve key stakeholders from across the organization to gather input and ensure the EDW meets their needs.

- Start small and iterate: Begin with a pilot project or proof of concept to validate your approach before scaling up.

- Focus on data quality: Invest in data quality processes and tools to ensure the accuracy and reliability of your data.

- Plan for scalability: Design your EDW architecture with scalability in mind to accommodate future growth and evolving business needs.
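As an illustration of the data quality practice, the sketch below runs two basic checks on a batch of records before loading: missing required values and duplicate keys. The function name, the record format (a list of dictionaries), and the choice of checks are assumptions; dedicated data quality tools cover far more.

```python
from collections import Counter


def data_quality_report(rows, required_fields, key_field):
    """Summarize missing required values and duplicate keys in a list of dict records."""
    missing = Counter()
    for row in rows:
        for field in required_fields:
            value = row.get(field)
            # Treat absent fields, None, and blank strings as missing.
            if value is None or str(value).strip() == "":
                missing[field] += 1
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)
    return {
        "row_count": len(rows),
        "missing": dict(missing),
        "duplicate_keys": duplicates,
    }
```

A report like this can gate a load job: if required fields are missing or keys are duplicated beyond an agreed tolerance, the batch is held back for review instead of reaching the warehouse.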

6. How can an EDW foster a data-driven culture within an organization? An EDW can foster a data-driven culture by:

- Providing easy access to data: Employees can access relevant data quickly and easily to inform their decision-making processes.

- Encouraging data-driven decision-making: By making data readily available, an EDW encourages employees to base their decisions on data rather than intuition.

- Promoting collaboration: An EDW facilitates collaboration across departments by providing a single source of truth for organizational data.

- Empowering data analysis: With advanced analytics capabilities, an EDW enables employees to perform in-depth analysis and derive actionable insights from data.

7. What are some common challenges associated with implementing an EDW? Common challenges associated with implementing an EDW include:

- Data integration complexities: Integrating data from disparate sources can be complex and time-consuming, requiring careful planning and coordination.

- Performance issues: Poorly designed or optimized EDW architectures can lead to performance issues such as slow query times or data processing bottlenecks.

- Data governance and security concerns: Ensuring data governance and security is crucial to protect sensitive information and maintain compliance with regulations.

- Cultural resistance: Resistance to change and lack of buy-in from employees can hinder the adoption of a data-driven culture within an organization.
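The performance challenge often comes down to whether a query can use an index or has to scan a whole table. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` to compare the plan for the same query before and after an index is added. SQLite and the `orders` table are stand-ins chosen for illustration; each warehouse platform has its own way of inspecting query plans, and the exact plan wording varies between SQLite versions.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's plan description for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the readable detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, order_date TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (order_date, total) VALUES (?, ?)",
    [(f"2024-01-{day:02d}", day * 10.0) for day in range(1, 29)],
)

SQL = "SELECT SUM(total) FROM orders WHERE order_date = ?"

# Without an index on order_date, the filter requires reading every row.
plan_before = query_plan(conn, SQL, ("2024-01-15",))

# With the index in place, the planner can look up matching rows directly.
conn.execute("CREATE INDEX idx_orders_date ON orders(order_date)")
plan_after = query_plan(conn, SQL, ("2024-01-15",))

print("before:", plan_before)
print("after: ", plan_after)
```

On a table this small the difference is negligible, but the same comparison on production-sized tables is a quick way to spot queries that need an index, partitioning, or a redesign.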

Thanks for reading our post “How to develop an enterprise data warehouse from scratch to foster a data-driven culture”. Please connect with us to learn more about building an enterprise data warehouse from scratch to foster a data-driven culture.