Understanding Generative AI: Fundamentals and Applications

In the realm of artificial intelligence (AI), one of the most intriguing and rapidly evolving fields is Generative AI. It’s not just a buzzword; it’s a technology that’s reshaping how we interact with machines and how machines interact with us. In this article, we will delve deep into the fundamentals of Generative AI, exploring its inner workings, applications, and the transformative potential it holds across various domains.

Unraveling the Basics: What is Generative AI?

Generative AI is a branch of artificial intelligence that focuses on teaching machines to generate content autonomously, often mimicking human-like creativity. Unlike traditional AI systems that are designed for specific tasks, such as classification or prediction, generative AI models have the capability to create new content from scratch. These models are trained on vast datasets and learn the underlying patterns and structures to generate outputs that are both realistic and novel.

The Core Techniques Behind Generative AI

Generative AI employs various techniques to generate content, with the most notable being:

- Generative Adversarial Networks (GANs): Introduced by Ian Goodfellow and his colleagues in 2014, GANs consist of two neural networks – a generator and a discriminator – that engage in a competitive game. The generator creates synthetic data samples, while the discriminator tries to distinguish between real and fake samples. Through this adversarial process, GANs learn to generate increasingly realistic outputs.

- Variational Autoencoders (VAEs): VAEs are another popular approach in generative modeling. They work by encoding input data into a learned latent probability distribution and then generating new samples by drawing from that distribution and decoding the result. VAEs are known for their ability to produce diverse outputs and are widely used in applications such as image generation and natural language processing.
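
To make the adversarial game in the GAN item above more concrete, here is a minimal sketch of the two losses involved. It assumes the discriminator outputs a probability that a sample is real, and it uses the commonly used non-saturating form of the generator loss. The function names and the sample probabilities are hypothetical and chosen only for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy minimized by the discriminator.

    d_real: discriminator outputs (probability of "real") on real samples.
    d_fake: discriminator outputs on samples produced by the generator.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are rated as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs on fakes, early and late in training.
early = generator_loss([0.1, 0.2])
late = generator_loss([0.6, 0.7])
```

In this sketch the generator loss falls as the discriminator becomes more likely to rate generated samples as real, which is the pressure that pushes the generator toward more realistic outputs.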

Applications of Generative AI

Generative AI has found applications across a wide range of domains, revolutionizing industries and enabling novel experiences. Some of the notable applications include:

- Image Generation and Editing: Generative models like GANs have been used to create realistic images, generate artistic designs, and even alter existing photographs seamlessly.

- Text Generation and Natural Language Processing (NLP): Generative models are capable of generating human-like text, enabling applications such as chatbots, language translation, and content creation.

- Drug Discovery and Molecular Design: In the pharmaceutical industry, generative AI is being used to discover new drug candidates and design molecules with desired properties, speeding up the drug development process.

- Content Creation and Creative Industries: From generating music compositions to designing fashion trends, generative AI is pushing the boundaries of creativity in various artistic fields.

Future Perspectives and Challenges

While generative AI holds immense promise, it also poses significant challenges, particularly in areas such as ethical considerations, bias mitigation, and ensuring the safety and reliability of generated content. As the technology continues to advance, addressing these challenges will be crucial in realizing its full potential and fostering responsible AI innovation.

Defining Your Objectives: Clear Goals for Your Generative AI Solution

In the dynamic landscape of artificial intelligence (AI), harnessing the power of generative AI solutions holds immense promise for businesses across industries. However, amidst this excitement, defining clear objectives becomes paramount for ensuring the efficacy and success of such endeavors. In this article, we delve into the significance of setting precise goals for your generative AI solution and provide a comprehensive guide to crafting them effectively.

Understanding Generative AI Solutions

Generative AI solutions are revolutionizing various domains by enabling machines to autonomously produce content, designs, or solutions based on input data or parameters. From creating art and music to generating text or even designing products, the capabilities of generative AI are vast and diverse.

The Importance of Clear Objectives

Before diving into the development and deployment of a generative AI solution, it’s essential to establish clear objectives. These objectives serve as guiding principles, steering the direction of the project and ensuring alignment with broader organizational goals. Clear objectives offer several benefits:

- Focus and Clarity: Defined objectives provide a clear vision of what the AI solution aims to achieve, eliminating ambiguity and preventing scope creep.

- Measurable Outcomes: Well-defined objectives enable the quantification of success, allowing stakeholders to track progress and evaluate the effectiveness of the solution.

- Resource Optimization: Clear objectives aid in resource allocation, ensuring that time, talent, and financial resources are invested judiciously to attain desired outcomes.

- Alignment with Stakeholders: Establishing objectives facilitates alignment among stakeholders, including executives, developers, and end-users, fostering collaboration and shared understanding.

Crafting Clear Objectives

Crafting clear objectives involves a systematic approach that encompasses understanding organizational needs, defining specific goals, and delineating key performance indicators (KPIs). Here’s a step-by-step guide:

- Identify Organizational Needs: Begin by understanding the overarching goals and challenges faced by your organization. Consider how a generative AI solution can address these needs and add value.

- Define Specific Goals: Break down the broad organizational objectives into specific, actionable goals that the generative AI solution will accomplish. These goals should be specific, measurable, achievable, relevant, and time-bound (SMART).

- Prioritize Objectives: Not all goals are created equal. Prioritize objectives based on their importance, feasibility, and potential impact on business outcomes. Focus on high-priority goals that align closely with strategic initiatives.

- Establish KPIs: Once objectives are defined, establish Key Performance Indicators (KPIs) to quantitatively measure progress and success. KPIs could include metrics such as accuracy, efficiency, user satisfaction, or ROI.

- Iterate and Refine: Objectives are not set in stone. Continuously review and refine them based on evolving business needs, technological advancements, and feedback from stakeholders. Flexibility and adaptability are key.
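
One way to keep objectives, KPIs, and priorities together is to record them as structured data. The sketch below is only an illustration: the `Objective` class, its fields, and the example values are all hypothetical, not a prescribed schema.

```python
from dataclasses import dataclass

@dataclass
class Objective:
    name: str
    kpi: str          # metric used to judge success
    target: float     # value the KPI should reach
    deadline: str     # time bound, as an ISO date string
    priority: int     # 1 = highest priority

# Hypothetical objectives for a product-description generator.
objectives = [
    Objective("Cut drafting time", "minutes_per_draft", 5.0, "2025-06-30", 2),
    Objective("Keep copy accurate", "factual_error_rate", 0.02, "2025-03-31", 1),
]

def by_priority(items):
    """Return objectives ordered from highest to lowest priority."""
    return sorted(items, key=lambda o: o.priority)

ranked = by_priority(objectives)
```

Because every objective carries its own KPI, target, and deadline, reviewing and refining the list later means editing data rather than rewriting a planning document.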

Choosing the Right Model Architecture: From GANs to Variational Autoencoders

In artificial intelligence and machine learning, selecting the right model architecture is a pivotal decision. Among the many options, Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are two prominent contenders, each with distinctive features and applications. Understanding the nuances of these architectures is essential for steering your project in the right direction.

Decoding GANs: Crafting Realistic Data

Generative Adversarial Networks, introduced by Ian Goodfellow and his colleagues in 2014, revolutionized the landscape of generative modeling. GANs operate on a simple yet ingenious principle of pitting two neural networks against each other – the generator and the discriminator. The generator fabricates synthetic data instances, while the discriminator discerns between real and fake data. Through a continuous adversarial process, GANs iteratively refine the generated samples, ultimately producing remarkably authentic outputs.
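
The adversarial process can be written as the minimax objective from the original 2014 GAN paper. Here D(x) is the discriminator's estimated probability that x is real, G(z) is the generator's output for a noise vector z, and p_data and p_z denote the data and noise distributions.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator tries to maximize this value by scoring real samples high and generated samples low, while the generator tries to minimize it by producing samples the discriminator scores as real.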

GANs find extensive utility in image synthesis, style transfer, and even text generation. Their ability to capture intricate data distributions and produce high-fidelity outputs makes them indispensable in creative domains like art generation and video manipulation.

Unraveling VAEs: Bridging Generative and Inference Models

In contrast, Variational Autoencoders (VAEs) offer a different approach to generative modeling. Rooted in Bayesian inference and variational methods, VAEs aim to learn a latent representation of input data. Comprising an encoder and a decoder network, VAEs facilitate the generation of new data points by sampling from the learned latent space.

What distinguishes VAEs is their emphasis on probabilistic modeling. By approximating the posterior distribution of latent variables, VAEs enable principled generation and manipulation of data while accounting for uncertainty. This makes them particularly suitable for tasks involving data generation under constrained conditions and anomaly detection.
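
The probabilistic view can be illustrated with two small pieces of a typical VAE. This is a sketch under the usual assumption that the encoder outputs a mean and log-variance for a diagonal Gaussian and that the prior is a standard normal; the function names are hypothetical.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.0, 1.0], [0.0, -2.0]   # hypothetical encoder outputs
z = sample_latent(mu, log_var, rng)     # a latent point to pass to the decoder
kl = kl_to_standard_normal(mu, log_var)
```

The KL term is zero when the approximate posterior matches the prior and grows as it moves away, which is how training keeps the latent space regular enough to sample from.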

Choosing the Right Architecture: Factors to Consider

Selecting between GANs and VAEs hinges on various factors, including:

- Data Characteristics: GANs excel in generating high-resolution, visually appealing data such as images, whereas VAEs are adept at handling structured data and accommodating uncertainty.

- Training Stability: GAN training can be notoriously unstable, requiring careful tuning of hyperparameters and monitoring. VAEs, on the other hand, offer a more stable training process, making them suitable for applications where reliability is paramount.

- Interpretability: VAEs provide a clearer interpretation of the latent space, enabling better understanding of the underlying data distribution. GANs, while proficient in generating realistic outputs, offer less interpretability due to the implicit nature of the learned representation.

- Application Requirements: Consider the specific demands of your application. If your goal is to generate visually appealing content with minimal constraints, GANs might be the preferred choice. Conversely, if you require explicit control over data generation or desire uncertainty quantification, VAEs offer a more suitable solution.

Evaluation Metrics: Assessing Performance and Progress

In today’s dynamic and competitive world, evaluating performance and progress is essential for individuals, teams, and organizations to thrive. Whether you’re measuring the success of a marketing campaign, assessing employee performance, or evaluating the effectiveness of educational programs, the use of evaluation metrics is crucial. In this article, we’ll delve into the significance of evaluation metrics, explore different types, and discuss how to effectively utilize them to gauge performance and progress.

Importance of Evaluation Metrics

Evaluation metrics serve as yardsticks for measuring performance and progress towards predefined goals and objectives. They provide valuable insights into what is working well and what areas need improvement. By establishing clear metrics, individuals and organizations can track their performance over time, identify trends, and make data-driven decisions to optimize their strategies.

Types of Evaluation Metrics

- Quantitative Metrics: These metrics involve numerical data and are easily quantifiable. Examples include sales revenue, customer acquisition cost, website traffic, and conversion rates. Quantitative metrics provide concrete measurements that allow for straightforward analysis and comparison.

- Qualitative Metrics: Unlike quantitative metrics, qualitative metrics are subjective and focus on the quality rather than the quantity of outcomes. Examples include customer satisfaction scores, brand perception, and employee morale. While qualitative metrics may be more challenging to measure, they offer valuable insights into aspects of performance that cannot be easily quantified.

- Key Performance Indicators (KPIs): KPIs are specific metrics that are directly tied to organizational objectives. They help organizations assess their progress towards strategic goals. Common KPIs vary across industries and may include metrics such as sales targets, customer retention rates, and employee productivity.

- Leading Indicators vs. Lagging Indicators: Leading indicators are predictive metrics that anticipate future performance, while lagging indicators measure past performance. For example, in sales analysis, the number of qualified leads in the pipeline may be considered a leading indicator, while quarterly revenue is a lagging indicator.

Effective Utilization of Evaluation Metrics

- Define Clear Objectives: Before selecting evaluation metrics, it’s essential to establish clear and measurable objectives. Clearly defined objectives provide a framework for selecting relevant metrics and ensure alignment with overarching goals.

- Select Appropriate Metrics: Choose metrics that are relevant to the objectives being evaluated and align with the organization’s priorities. Avoid overloading with metrics; focus on those that provide meaningful insights into performance and progress.

- Set Benchmarks and Targets: Establish benchmarks and targets to provide context for evaluation metrics. Benchmarks serve as reference points for comparison, while targets define desired levels of performance. Regularly review benchmarks and targets to ensure they remain relevant and achievable.

- Collect and Analyze Data: Implement systems to collect relevant data accurately and consistently. Utilize analytics tools and software to streamline data collection and analysis processes. Regularly analyze data to identify trends, patterns, and areas for improvement.

- Iterate and Improve: Evaluation metrics should not be static; they should evolve with changing circumstances and objectives. Continuously review and refine metrics based on feedback and insights gained from data analysis. Iterate on strategies to improve performance and achieve desired outcomes.
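
The relationship between benchmarks and targets described above can be sketched as a simple progress calculation. The `progress` function and the figures below are hypothetical; the only assumption is that each metric has a baseline value, a current value, and a target value.

```python
def progress(baseline, current, target):
    """Fraction of the gap from baseline to target that has been closed.

    Works for metrics that should rise or fall, because the sign of
    (target - baseline) sets the direction of improvement.
    """
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)

# Hypothetical numbers: conversion rate should rise, cost per lead should fall.
conversion = progress(baseline=0.020, current=0.026, target=0.030)
cost_per_lead = progress(baseline=50.0, current=44.0, target=40.0)
```

A value near 1.0 means the target has been reached, and a negative value means the metric has moved in the wrong direction since the baseline was set.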

Scaling Up: Transitioning from Prototype to Full-Scale Implementation

Innovation is the heartbeat of progress, fueled by the relentless pursuit of improvement. At the heart of this journey lies the prototype – a tangible manifestation of an idea, a beacon of potential. Yet, the true test of innovation lies in its ability to transcend the confines of the prototype phase and embrace the challenges of full-scale implementation. This journey, often referred to as scaling up, is a pivotal moment in the life cycle of any innovation. It’s a transition marked by excitement, anticipation, and a fair share of hurdles to overcome.

Scaling up is not merely about replicating the success of a prototype on a larger scale; it’s a strategic endeavor that requires careful planning, foresight, and adaptability. Here’s a roadmap to guide you through this transformative process:

- Evaluate and Refine: Begin by critically evaluating your prototype. Identify its strengths, weaknesses, and areas for improvement. Solicit feedback from stakeholders, end-users, and experts in the field. Use this feedback to refine your prototype, ensuring that it is robust, reliable, and ready for scaling.

- Define Clear Objectives: Set clear and measurable objectives for the scaling process. What are you hoping to achieve by scaling up? Whether it’s increased efficiency, expanded reach, or enhanced functionality, clearly define your goals and align them with your broader strategic vision.

- Develop a Scalable Infrastructure: Scaling up requires more than just replicating what worked in the prototype phase. It demands the development of a scalable infrastructure capable of supporting growth and evolution. Invest in robust systems, processes, and technologies that can accommodate increased demand and complexity.

- Secure Funding: Scaling up requires financial resources to support expanded operations, production, and marketing efforts. Secure the necessary funding through a combination of sources, including venture capital, grants, loans, and strategic partnerships. Articulate a compelling business case that highlights the potential for scalability and returns on investment.

- Mitigate Risks: Scaling up inevitably involves risks, ranging from supply chain disruptions to market volatility. Identify potential risks and develop mitigation strategies to minimize their impact. This may involve diversifying suppliers, implementing contingency plans, or hedging against market fluctuations.

- Engage Stakeholders: Effective communication and collaboration are essential for successful scaling up. Engage stakeholders at every stage of the process, from conceptualization to implementation. Foster a culture of transparency, trust, and accountability, ensuring that all parties are aligned and invested in the success of the scaling initiative.

- Iterate and Adapt: Scaling up is not a one-time event but an ongoing journey of iteration and adaptation. Continuously monitor performance metrics, gather feedback, and iterate on your approach. Be prepared to pivot and course-correct as needed, embracing change as an opportunity for growth and learning.

Data Management and Security: Safeguarding Sensitive Information

In today’s digital age, where information is a valuable asset, safeguarding sensitive data has become paramount for businesses and individuals alike. With the exponential growth of data generation and the increasing sophistication of cyber threats, effective data management and security measures are essential to protect sensitive information from unauthorized access, misuse, or theft.

Data management encompasses the processes and technologies used to collect, store, organize, and utilize data efficiently. It involves not only the storage and retrieval of data but also ensuring its accuracy, integrity, and accessibility. Effective data management lays the foundation for robust security practices, as it enables organizations to identify and classify sensitive information, determine who has access to it, and implement appropriate security controls.

One of the key elements of data management is data encryption. Encryption converts data into a format that can only be read or understood by authorized parties, thus preventing unauthorized access even if the data is intercepted. By encrypting sensitive information both in transit and at rest, organizations can significantly reduce the risk of data breaches and protect the confidentiality of their data.

Another crucial aspect of data management and security is access control. Access control mechanisms ensure that only authorized individuals or systems are granted access to sensitive data. This can be achieved through user authentication methods such as passwords, biometric authentication, or multi-factor authentication. Additionally, role-based access control (RBAC) allows organizations to assign specific access rights to users based on their roles and responsibilities within the organization, limiting the exposure of sensitive data to only those who need it to perform their duties.
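
A minimal sketch of role-based access control follows. The role names, permission strings, and users are hypothetical, and a production system would normally load them from an identity provider or policy store rather than hard-coding them.

```python
# Hypothetical mapping from roles to the permissions they grant.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "engineer": {"read:reports", "read:training_data"},
    "admin": {"read:reports", "read:training_data", "write:training_data"},
}

# Hypothetical mapping from users to their assigned roles.
USER_ROLES = {"dana": {"analyst"}, "lee": {"engineer"}}

def is_allowed(user, permission):
    """Grant access only if one of the user's roles carries the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown users have no roles and are therefore denied by default, which matches the goal of limiting exposure to those who need the data.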

Furthermore, data management involves implementing data loss prevention (DLP) measures to prevent the unauthorized transmission of sensitive information outside the organization’s network. DLP solutions can monitor and enforce policies to detect and block attempts to transfer sensitive data through email, web applications, or removable storage devices. By proactively identifying and preventing data leaks, organizations can mitigate the risk of inadvertent or malicious data breaches.
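
As a rough illustration of what a DLP policy check does, the sketch below scans outbound text for two kinds of sensitive pattern. It is a simplified assumption of how such a rule might look, not how any particular DLP product works: it matches US Social Security number formatting and card-like digit runs that pass the Luhn checksum. All names are hypothetical.

```python
import re

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 16 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

def luhn_valid(number):
    """Luhn checksum, used to discard digit runs that cannot be card numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def find_sensitive(text):
    """Return (kind, match) pairs for sensitive-looking content in the text."""
    findings = [("ssn", m.group()) for m in SSN_PATTERN.finditer(text)]
    for m in CARD_PATTERN.finditer(text):
        if luhn_valid(m.group()):
            findings.append(("card", m.group()))
    return findings

def allow_outbound(text):
    """Block the message if anything sensitive was detected."""
    return not find_sensitive(text)
```

Real DLP tools combine many more detectors with context rules, so pattern matching like this should be read as the simplest possible starting point.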

Data management and security also extend to the proper disposal of data. When sensitive information reaches the end of its lifecycle or is no longer needed, it must be securely deleted or destroyed to prevent unauthorized access or misuse. Data destruction methods such as overwriting, degaussing, or physical destruction of storage media ensure that sensitive data cannot be recovered once it is no longer required.

Training and Fine-Tuning: Optimization for Enhanced Performance

Deployment Considerations: Integration into Existing Systems and Infrastructure

In the ever-evolving landscape of technology, deploying new systems or software into existing infrastructure is a critical endeavor for businesses aiming to stay competitive and innovative. However, this process requires careful consideration and planning to ensure a seamless integration without disrupting ongoing operations. From compatibility issues to scalability concerns, navigating deployment considerations demands a strategic approach. Let’s delve into some key factors to ponder when integrating new solutions into your existing systems and infrastructure.

1. Compatibility Assessment: Before embarking on any deployment journey, it’s essential to conduct a thorough compatibility assessment. Evaluate how the new system aligns with your current infrastructure, including hardware, software, and network configurations. Identify any potential conflicts or dependencies that may arise during integration and devise strategies to address them proactively.

2. Scalability and Performance: Scalability is paramount, especially in today’s dynamic business environment. Consider how the new system will accommodate future growth and increased demand. Assess its performance under different workloads and ensure that it can scale seamlessly without compromising efficiency or user experience. Implementing scalable solutions ensures long-term viability and minimizes the need for frequent overhauls.

3. Data Migration and Interoperability: Smooth data migration is crucial for preserving valuable information and ensuring continuity throughout the deployment process. Evaluate the compatibility of data formats, structures, and protocols between the existing and new systems. Implement robust data migration strategies to minimize downtime and data loss while maintaining data integrity. Additionally, prioritize interoperability to facilitate seamless communication and data exchange between disparate systems.

4. Security and Compliance: Security should be a top priority when integrating new systems into existing infrastructure. Assess the security features and protocols of the new solution to ensure compliance with industry standards and regulations. Implement robust security measures, such as encryption, access controls, and intrusion detection systems, to safeguard sensitive data and protect against potential threats. Conduct thorough security audits and risk assessments to identify and mitigate vulnerabilities before deployment.

5. Training and Change Management: Introducing new systems often necessitates a cultural shift within the organization. Invest in comprehensive training programs to familiarize employees with the new technology and equip them with the necessary skills to leverage its full potential. Implement effective change management strategies to minimize resistance to change and foster a culture of adaptability and innovation. Engage stakeholders throughout the deployment process to solicit feedback and address concerns proactively.

6. Cost Analysis and Return on Investment (ROI): Deploying new systems involves significant financial investment, so it’s essential to conduct a comprehensive cost-benefit analysis. Evaluate the total cost of ownership, including acquisition, implementation, maintenance, and operational expenses, against the expected benefits and ROI. Consider both short-term gains and long-term value to make informed decisions about deployment strategies and resource allocation.
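
For the data migration step in the list above, one common way to check data integrity is to compare per-record checksums between the old and new systems. The sketch below assumes records are available as dictionaries with an `id` field, which is a hypothetical simplification. The function names and sample records are also illustrative.

```python
import hashlib
import json

def record_digest(record):
    """Stable SHA-256 digest of one record, independent of key order."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def migration_diff(source, target, key="id"):
    """Return ids missing from the target and ids whose content changed."""
    src = {r[key]: record_digest(r) for r in source}
    dst = {r[key]: record_digest(r) for r in target}
    missing = sorted(k for k in src if k not in dst)
    changed = sorted(k for k in src if k in dst and src[k] != dst[k])
    return missing, changed

# Hypothetical records before and after migration.
source = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}, {"id": 3, "name": "Sam"}]
target = [{"name": "Ada", "id": 1}, {"id": 2, "name": "Lyn"}]
missing, changed = migration_diff(source, target)
```

Running a comparison like this after each migration batch makes data loss or silent corruption visible before the old system is retired.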

Ethical Considerations: Addressing Bias and Fairness in Generative Models

In the era of artificial intelligence (AI), the development and application of generative models have seen unprecedented growth, revolutionizing industries ranging from healthcare to entertainment. These models, driven by powerful algorithms, hold immense potential to create novel content, generate realistic images, and even assist in drug discovery. However, amidst this technological advancement, there lies a pressing concern: the ethical implications of bias and fairness in generative models.

Understanding Bias in Generative Models:

Bias in AI systems, including generative models, arises from various sources, including biased training data, algorithmic design, and societal prejudices. When biased data is used to train these models, they tend to replicate and amplify existing societal biases, perpetuating discrimination and marginalization.

For instance, if a generative model is trained on a dataset that predominantly represents one demographic group, it may struggle to accurately represent or generate content relevant to other groups. This can lead to underrepresentation or misrepresentation, reinforcing stereotypes and exacerbating societal inequalities.

Addressing bias requires a multifaceted approach, starting from data collection and preprocessing to algorithmic design and model evaluation. It involves ensuring diversity and representativeness in training datasets, implementing fairness-aware algorithms, and conducting rigorous testing to identify and mitigate bias at every stage of development.
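
A first, simple check on dataset representativeness is to measure how much of the training data each group accounts for. The sketch below assumes every training example carries a group label, which is not always available in practice. The threshold and function names are hypothetical.

```python
from collections import Counter

def group_shares(labels):
    """Share of the dataset that each group label accounts for."""
    counts = Counter(labels)
    total = len(labels)
    return {group: count / total for group, count in counts.items()}

def underrepresented(labels, min_share):
    """Groups whose share of the dataset falls below the chosen threshold."""
    return sorted(g for g, share in group_shares(labels).items() if share < min_share)

# Hypothetical group labels attached to 100 training examples.
labels = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
flagged = underrepresented(labels, min_share=0.10)
```

A flagged group is a prompt for further data collection or reweighting. It does not prove the resulting model is biased.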

Fostering Fairness in Generative Models:

Fairness in generative models goes beyond simply avoiding biased outcomes; it entails ensuring equitable outcomes for all individuals, regardless of their demographic characteristics. Achieving fairness involves designing models that prioritize inclusivity, transparency, and accountability.

One approach to fostering fairness is through the implementation of fairness-aware algorithms that explicitly consider fairness constraints during model training and generation. These algorithms aim to mitigate biases and promote equitable outcomes by incorporating fairness metrics into the optimization process.
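
One widely used fairness metric is the demographic parity gap, the difference between groups in the rate of favorable outcomes. The sketch below only measures the gap. Using it as a penalty during training is one possible design and is an assumption here, not something the article specifies. The data and names are hypothetical.

```python
def positive_rate(outcomes):
    """Fraction of outcomes that are favorable (1) rather than unfavorable (0)."""
    return sum(outcomes) / len(outcomes)

def demographic_parity_gap(outcomes_by_group):
    """Largest difference in favorable-outcome rate between any two groups."""
    rates = [positive_rate(o) for o in outcomes_by_group.values()]
    return max(rates) - min(rates)

# Hypothetical audit: 1 marks a favorable model output for a member of the group.
outcomes = {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]}
gap = demographic_parity_gap(outcomes)
```

A gap of zero means all groups receive favorable outputs at the same rate. Demographic parity is only one of several fairness definitions, and which one applies depends on the use case.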

Furthermore, promoting transparency and explainability in generative models is crucial for ensuring accountability and enabling stakeholders to understand how decisions are made. By providing insights into the model’s inner workings and potential biases, transparency empowers users to assess the fairness of model outputs and intervene when necessary.

Ethical Considerations and Societal Impact:

The ethical implications of bias and fairness in generative models extend beyond technical considerations; they have profound societal implications that shape our collective future. Biased AI systems can perpetuate discrimination, amplify existing inequalities, and erode trust in AI technologies.

Conversely, fair and unbiased generative models have the potential to foster inclusivity, promote diversity, and contribute to positive social change. By prioritizing ethical considerations and addressing bias and fairness concerns, we can harness the transformative power of AI to build a more equitable and just society.

Future Developments: Anticipating Trends and Evolving Technologies in Generative AI

In the intricate web of technological advancements, one realm stands out with unparalleled promise and intrigue – Generative Artificial Intelligence (AI). As we gaze into the horizon of future developments, the landscape of Generative AI unfolds with captivating prospects, offering a glimpse into the transformative journey ahead.

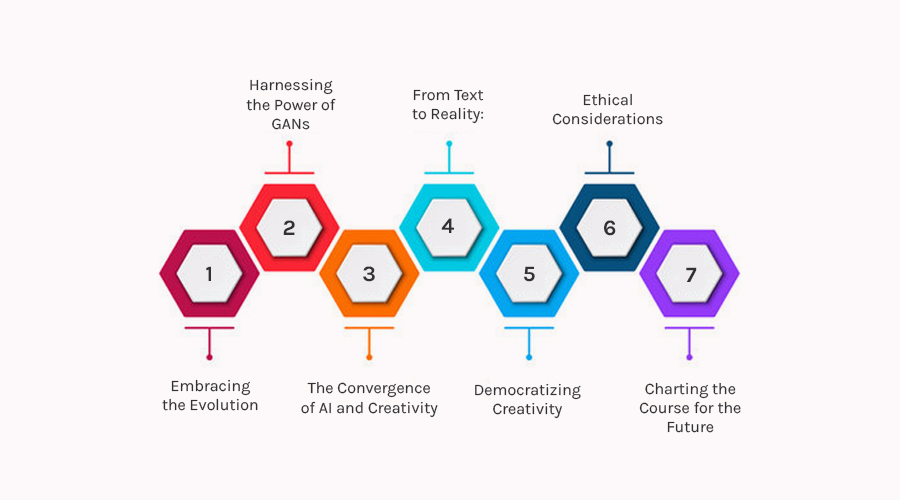

Embracing the Evolution: Generative AI, characterized by its ability to create content autonomously, has transcended conventional boundaries, catalyzing innovations across diverse domains. From generating realistic images and videos to composing music and crafting literature, its applications are boundless, and its potential infinite.

Harnessing the Power of GANs: At the forefront of this evolution lie Generative Adversarial Networks (GANs), a paradigm-shifting concept introduced by Ian Goodfellow and his team in 2014. GANs have become the cornerstone of generative AI, facilitating the creation of authentic and intricate outputs by pitting two neural networks against each other – the generator and the discriminator.

The Convergence of AI and Creativity: In the realm of creative expression, Generative AI is poised to redefine the boundaries of human imagination. Artists, designers, and storytellers are leveraging AI-powered tools to augment their creativity, ushering in an era where man and machine collaborate harmoniously to produce awe-inspiring works of art.

From Text to Reality: Advancements in Natural Language Processing (NLP)

Natural Language Processing (NLP) has emerged as a driving force behind the evolution of Generative AI, enabling machines to comprehend, interpret, and generate human language with unprecedented accuracy and fluency. With the advent of models like GPT (Generative Pre-trained Transformer), the boundaries of linguistic creativity are continuously being pushed, heralding a new era of natural and engaging human-machine interaction.

Democratizing Creativity: Accessible Tools for All

As Generative AI matures, efforts are underway to democratize access to its transformative capabilities. Open-source platforms, user-friendly interfaces, and community-driven initiatives are empowering individuals from diverse backgrounds to harness the power of AI in their creative endeavors, fostering a culture of inclusivity and collaboration.

Ethical Considerations: Navigating the Path Ahead

Amidst the excitement surrounding the advancements in Generative AI, it is imperative to address the ethical implications that accompany its proliferation. Issues related to data privacy, algorithmic bias, and the potential misuse of AI-generated content necessitate a nuanced and proactive approach to ensure that these technologies are developed and deployed responsibly.

Charting the Course for the Future: As we embark on this journey of exploration and innovation, the future of Generative AI appears both exhilarating and enigmatic. With each breakthrough and every milestone, we inch closer to a world where the boundaries between human creativity and artificial intelligence blur, giving rise to new possibilities and unforeseen opportunities.

Top Generative AI Solution Companies

In the realm of artificial intelligence (AI), the emergence of generative AI solutions has sparked a revolution across various industries. These cutting-edge technologies are not only transforming processes but also redefining possibilities. Here, we delve into the top generative AI solution companies that are leading this transformative wave.

- Next Big Technology: Focus areas include mobile app development, app designing (UI/UX), software development, web development, AR & VR development, big data & BI, cloud computing services, DevOps, and e-commerce development. Industries in focus include art, entertainment & music; business services; consumer products; designing; education; financial & payments; gaming; government; healthcare & medical; hospitality; information technology; legal & compliance; manufacturing; and media.

- IBM: IBM’s Watson AI platform spans various domains, including healthcare, finance, and cybersecurity. Through natural language understanding and generation, IBM helps businesses make data-driven decisions and enhance customer experiences.

- Google: Google’s research produced the Transformer architecture, which underlies most modern generative language models, and BERT (Bidirectional Encoder Representations from Transformers), an encoder model used mainly for language understanding rather than generation. These technologies have improved search engines, language translation, and content generation, making information more accessible and understandable across languages and cultures.

- Microsoft: Microsoft’s Azure AI platform offers a suite of generative AI tools and services, enabling businesses to leverage the power of AI for innovation and growth. From conversational AI to image generation, Microsoft’s solutions are driving advancements in diverse fields, including healthcare, retail, and manufacturing.

- NVIDIA: NVIDIA’s expertise in graphics processing units (GPUs) has positioned them as a key player in generative AI research and development. Their deep learning frameworks and hardware accelerators have empowered researchers and developers to create AI models capable of generating realistic images, videos, and even entire virtual environments.

- Adobe: Adobe’s Sensei platform integrates generative AI capabilities into creative tools like Photoshop and Premiere Pro, revolutionizing digital content creation. By automating repetitive tasks and offering intelligent suggestions, Adobe empowers creatives to unleash their imagination and bring their ideas to life more efficiently.

- Salesforce: Salesforce leverages generative AI to enhance customer relationship management (CRM) systems and streamline business operations. Their Einstein AI platform offers predictive analytics and personalized recommendations, empowering organizations to deliver exceptional customer experiences and drive revenue growth.

- DeepMind: Acquired by Google in 2014, DeepMind is best known for projects like AlphaGo, which used reinforcement learning to master the game of Go, and AlphaFold, which predicts protein structures. This research has profound implications for fields ranging from gaming to drug discovery, demonstrating the transformative potential of AI-driven innovation.

FAQs on Generative AI Solutions

In the realm of technology, generative AI solutions have emerged as a revolutionary force, transforming various industries with their ability to create, innovate, and automate. However, with innovation comes curiosity and inquiries. Here, we address some of the frequently asked questions regarding generative AI solutions to provide clarity and insight into this cutting-edge technology.

1. What is Generative AI? Generative AI refers to a branch of artificial intelligence that focuses on creating new content, such as images, text, music, and even videos, based on patterns it has learned from data. Unlike traditional AI systems, which are typically built for specific tasks such as classification or prediction, generative AI can produce new outputs autonomously.

2. How does Generative AI work? Generative AI utilizes neural networks, a type of machine learning algorithm inspired by the human brain, to generate content. These networks are trained on vast amounts of data, learning the underlying patterns and structures. Once trained, they can generate new content by extrapolating from the learned patterns, often producing outputs that mimic the style and characteristics of the training data.
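The train-then-generate loop described above can be illustrated with a deliberately tiny stand-in. The sketch below swaps the neural network for a word-level bigram table, which is a much simpler technique, purely to show the two phases: learning which patterns occur in the data, then sampling new sequences from them. The function names, corpus, and seed are hypothetical.

```python
import random
from collections import defaultdict

def train_bigrams(text):
    """Learn which words follow which in the training text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def generate(table, start, length, seed=0):
    """Sample a new word sequence by repeatedly picking a learned follower."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = table.get(word)
        if not followers:
            break  # no learned continuation for this word
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)

corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigrams(corpus)
sample = generate(model, "the", 6)
print(sample)
```

Every adjacent word pair in the output was seen in the training text, yet the full sequence may be one that never appeared there. A neural generative model follows the same outline but learns far richer patterns than word pairs.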

3. What are the applications of Generative AI? Generative AI finds applications across various domains, including:

- Art and Design: Generating artwork, designs, and creative content.

- Content Creation: Producing articles, stories, and scripts.

- Media Production: Creating music, videos, and special effects.

- Gaming: Generating virtual environments, characters, and narratives.

- Healthcare: Assisting in medical image analysis and drug discovery.

- Finance: Generating financial reports, forecasts, and trading strategies.

4. What ethical considerations apply to Generative AI solutions? Ethical considerations are crucial in the development and deployment of generative AI solutions. Developers must ensure that their algorithms do not propagate biases present in the training data and that generated content adheres to ethical standards. Additionally, there are ongoing discussions within the AI community regarding the responsible use of generative AI, particularly concerning issues such as privacy, misinformation, and intellectual property rights.

5. Can Generative AI solutions replace human creativity? While generative AI can produce impressive and creative outputs, it is not intended to replace human creativity. Instead, it serves as a tool to augment human creativity and productivity. Human input is still essential in guiding and refining the outputs generated by AI, adding context, emotion, and subjective judgment that machines currently lack.

6. How can businesses leverage Generative AI solutions? Businesses can leverage generative AI solutions in various ways to enhance their operations and offerings. For instance, they can use AI-generated content for marketing campaigns, product design, and personalized customer experiences. Additionally, generative AI can streamline processes such as data analysis, content generation, and decision-making, leading to greater efficiency and innovation.

7. What are the challenges associated with Generative AI? Despite its potential, generative AI also poses several challenges, including:

- Quality Control: Ensuring the quality and reliability of generated content.

- Data Privacy: Protecting sensitive data used in training generative models.

- Ethical Concerns: Addressing ethical implications, such as bias and misinformation.

- Regulatory Compliance: Adhering to regulations governing AI technologies.

- Security Risks: Guarding against potential misuse and exploitation of generative AI systems.

Thanks for reading our post “How To Build A Generative AI Solution – From Prototyping To Production”. Please connect with us to learn more about building a generative AI solution.