Pre-Scaling Challenges: Why the App Was Struggling

Server response times increased exponentially as user count approached 5,000

Before we could scale the app to 100k users, we needed to understand what was holding it back. Our initial audit revealed several critical bottlenecks:

Database Overload

The app was using a single PostgreSQL instance with poorly optimized queries. Transaction categorization algorithms were running directly in the database, causing CPU utilization to spike to 95% during peak hours. Simple dashboard requests were taking up to 8 seconds to complete.

Monolithic Architecture

The entire application ran as a single Node.js service. This meant that resource-intensive operations like generating monthly reports would block other critical functions. The codebase had become difficult to maintain with over 150,000 lines of code in one repository.

Inefficient Caching

The app lacked a proper caching strategy, recalculating the same data for each user request. User profile data, transaction categories, and spending insights were being regenerated with every API call, creating unnecessary database load.

“We knew we had a great product that users loved, but our infrastructure was holding us back. We couldn’t onboard new users without degrading the experience for everyone else.”

Additionally, the client was facing significant user retention issues. Analytics showed that 30% of users were abandoning the app during peak hours due to slow load times and frequent timeouts. Their cloud infrastructure costs were also increasing exponentially without corresponding growth in user numbers.

Our Step-by-Step Scaling Strategy

After thoroughly analyzing the application’s architecture and performance metrics, we developed a comprehensive scaling strategy. Our approach focused on addressing immediate bottlenecks while building a foundation that could support future growth beyond 100k users.

Phase 1: Database Optimization and Separation (Weeks 1-3)

Our first priority was addressing the database bottlenecks, as this was the most immediate constraint on scaling. We implemented the following changes:

- Query Optimization: We identified and rewrote the 20 most resource-intensive queries, reducing their execution time by 80% on average.

- Database Indexing: We added strategic indexes to the most frequently accessed tables, particularly for transaction data and user profiles.

- Read Replicas: We set up two read replicas to offload reporting and analytics queries from the primary database.

- Data Partitioning: We implemented time-based partitioning for transaction data, significantly improving query performance for historical analysis.

Key Win: Database query optimization alone reduced server load by 40% and improved average response times from 3.2 seconds to 0.4 seconds.
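As a rough illustration of the read-replica setup, the sketch below sends plain `SELECT` statements to replicas in round-robin order and everything else to the primary. The `createQueryRouter` helper and the connection labels are hypothetical, not the client's code, and a real deployment would also have to account for replication lag and for reads issued inside transactions.

```javascript
// Hypothetical sketch: choose a database target per statement.
// `primary` and `replicas` are plain labels here; in a real service they
// would be connection pools.
function createQueryRouter({ primary, replicas }) {
  let next = 0; // index of the replica to use for the next read

  // Treat only plain SELECT statements (without FOR UPDATE) as replica-safe.
  function isReadOnly(sql) {
    return /^\s*select\b/i.test(sql) && !/\bfor\s+update\b/i.test(sql);
  }

  return {
    route(sql) {
      if (replicas.length === 0 || !isReadOnly(sql)) {
        return primary;
      }
      const target = replicas[next];
      next = (next + 1) % replicas.length;
      return target;
    },
  };
}

const router = createQueryRouter({
  primary: 'primary-db',
  replicas: ['replica-1', 'replica-2'],
});

console.log(router.route('SELECT * FROM transactions WHERE user_id = 42')); // replica-1
console.log(router.route('UPDATE users SET name = $1 WHERE id = $2')); // primary-db
```

Anything the router cannot clearly identify as a read goes to the primary, which is the conservative default.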

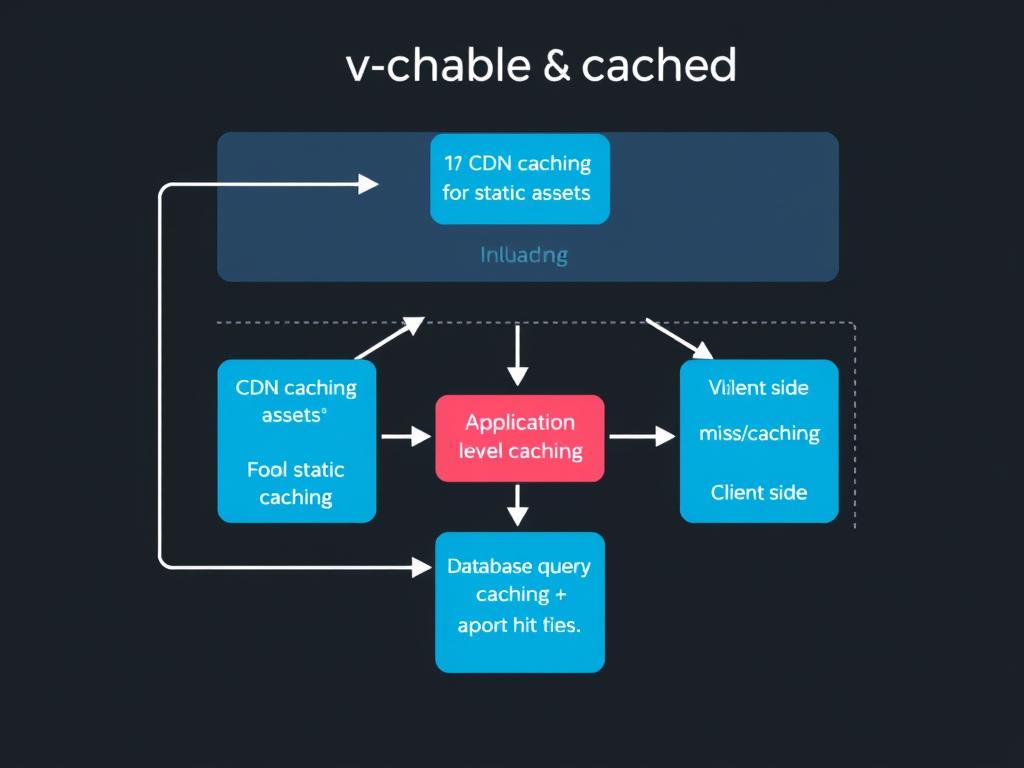

Phase 2: Implementing Effective Caching (Weeks 3-5)

With the database bottlenecks addressed, we implemented a multi-layered caching strategy:

- Redis Implementation: We deployed Redis to cache frequently accessed data, including user profiles, transaction categories, and spending insights.

- CDN Integration: Static assets were moved to a CDN, reducing load on the application servers.

- Client-Side Caching: We implemented strategic browser caching for appropriate resources and state management.

- Cache Invalidation Strategy: We combined time-based expiration with event-based invalidation to keep cached data consistent while maximizing cache efficiency.

This caching implementation had an immediate impact on performance. Dashboard load times decreased from 3+ seconds to under 300ms for most users, and server load dropped significantly even as we began adding more users.
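The caching pattern can be sketched roughly as follows. This is an in-memory stand-in for Redis, not the production code: the `TtlCache` class, the five-minute TTL, and the key format are assumptions made for illustration. It shows time-based expiration and explicit invalidation working together.

```javascript
// Hypothetical sketch: an in-memory stand-in for a Redis-backed cache.
// Entries expire after `ttlMs` and can also be invalidated explicitly.
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, which makes expiry easy to test
    this.entries = new Map();
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // time-based expiration
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  invalidate(key) {
    this.entries.delete(key); // event-based invalidation
  }

  // Cache-aside read: return the cached value, or load it and store it.
  async getOrLoad(key, loader) {
    const cached = this.get(key);
    if (cached !== undefined) return cached;
    const value = await loader(key);
    this.set(key, value);
    return value;
  }
}

// Example: drop a user's spending insights when a new transaction arrives.
const insightsCache = new TtlCache(5 * 60 * 1000); // assumed 5-minute TTL

function onTransactionCreated(userId) {
  insightsCache.invalidate(`insights:${userId}`);
}
```

The TTL bounds how stale an entry can get if an invalidation event is missed, while the event hook keeps hot data fresh between expirations.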

Phase 3: Breaking the Monolith (Weeks 5-12)

The next critical step was breaking down the monolithic architecture into more manageable, independently scalable services:

| Service | Responsibility | Technology | Scaling Pattern |
| --- | --- | --- | --- |
| Core API | User authentication, basic CRUD operations | Node.js, Express | Horizontal scaling with load balancer |
| Transaction Service | Processing and categorizing transactions | Node.js, RabbitMQ | Worker pool with queue |
| Analytics Engine | Generating insights and reports | Python, Pandas | Scheduled jobs with caching |
| Notification Service | Push, email, and in-app notifications | Node.js, Redis | Event-driven with queue |

The migration to microservices was done incrementally, with each service being extracted, tested, and deployed without disrupting the existing application. We used the following approach:

- Extract service code and create a new repository

- Set up CI/CD pipeline for the new service

- Deploy the service alongside the monolith

- Implement an API gateway to route requests

- Gradually shift traffic from the monolith to the new service

- Monitor performance and fix issues

- Complete the migration once stability is confirmed
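The gradual traffic shift in the steps above can be sketched as a percentage rollout keyed on the user ID. This is one possible approach, not necessarily the mechanism used on this project; `chooseBackend` and the backend labels are hypothetical names.

```javascript
// Hypothetical sketch: decide per user whether a request goes to the new
// service or stays on the monolith, based on a rollout percentage.

// FNV-1a-style 32-bit hash over UTF-16 code units. It is deterministic,
// so a given user always lands in the same bucket.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // force an unsigned 32-bit result
}

function chooseBackend(userId, rolloutPercent) {
  const bucket = fnv1a(String(userId)) % 100; // 0..99
  return bucket < rolloutPercent ? 'new-service' : 'monolith';
}

console.log(chooseBackend('user-123', 0)); // monolith
console.log(chooseBackend('user-123', 100)); // new-service
```

Because the bucket depends only on the user ID, raising the percentage moves more users to the new service without bouncing anyone back to the monolith.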

```javascript
// Example API Gateway routing logic
const express = require('express');
const { createProxyMiddleware } = require('http-proxy-middleware');

const app = express();

// Route to new Transaction Service
app.use('/api/transactions', createProxyMiddleware({
  target: 'http://transaction-service:3000',
  changeOrigin: true,
  pathRewrite: {
    '^/api/transactions': '/transactions',
  },
}));

// Default route to legacy monolith
app.use('*', createProxyMiddleware({
  target: 'http://legacy-monolith:3000',
  changeOrigin: true,
}));

app.listen(8080);
```

Phase 4: Infrastructure Modernization (Weeks 12-18)

With the application architecture improved, we modernized the infrastructure to support continued scaling:

Containerization

We containerized all services using Docker and implemented Kubernetes for orchestration. This provided consistent environments across development and production while enabling auto-scaling based on demand.
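For a sense of how demand-based auto-scaling arrives at a replica count, the sketch below follows the calculation documented for the Kubernetes Horizontal Pod Autoscaler. The metric, target, and replica bounds are illustrative assumptions; the case study does not state which settings were used.

```javascript
// Sketch of the replica calculation documented for the Kubernetes
// Horizontal Pod Autoscaler:
//   desired = ceil(currentReplicas * currentMetric / targetMetric)
function desiredReplicas({ current, currentMetric, targetMetric, min, max, tolerance = 0.1 }) {
  const ratio = currentMetric / targetMetric;
  // Within the tolerance band, the replica count is left unchanged.
  if (Math.abs(ratio - 1) <= tolerance) {
    return current;
  }
  const desired = Math.ceil(current * ratio);
  return Math.min(max, Math.max(min, desired));
}

// Illustrative numbers (not from the case study): 3 replicas averaging
// 90% CPU against a 60% target.
console.log(
  desiredReplicas({ current: 3, currentMetric: 90, targetMetric: 60, min: 3, max: 10 })
); // 5
```

The `min` and `max` bounds keep a traffic spike or a faulty metric from scaling the service to zero or to an unbounded size.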

Load Balancing

We implemented an Nginx-based load balancing solution with sticky sessions where needed. This distributed traffic evenly across service instances and provided failover capabilities.

Monitoring & Alerting

We deployed a comprehensive monitoring stack with Prometheus and Grafana, along with automated alerting for performance issues and anomalies.

Kubernetes auto-scaling in action as user count increased

Phase 5: UX Optimization and Progressive Loading (Weeks 18-24)

The final phase focused on optimizing the user experience to maintain high engagement as user numbers grew:

- Progressive Loading: We implemented skeleton screens and progressive loading patterns to give users immediate feedback while data loaded.

- Offline Capabilities: We added offline functionality for core features using service workers, reducing dependency on constant network connectivity.

- Performance Budgets: We established strict performance budgets for each screen and component, ensuring the app remained fast as new features were added.

- Optimized Assets: We reduced bundle sizes through code splitting, lazy loading, and image optimization.
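A performance budget check can be as simple as comparing measured values against per-screen limits, for example as a CI step. The sketch below is a minimal version; the screen names, metric names, and limits are illustrative assumptions rather than the budgets used on this project.

```javascript
// Hypothetical sketch: compare measured values against per-screen budgets.
// The screen names, metric names, and limits are illustrative assumptions.
const budgets = {
  dashboard: { loadTimeMs: 1000, bundleKb: 250 },
  transactions: { loadTimeMs: 1200, bundleKb: 300 },
};

function findBudgetViolations(budgets, measurements) {
  const violations = [];
  for (const [screen, limits] of Object.entries(budgets)) {
    const measured = measurements[screen] || {};
    for (const [metric, limit] of Object.entries(limits)) {
      const value = measured[metric];
      // A missing measurement is skipped rather than treated as a failure.
      if (typeof value === 'number' && value > limit) {
        violations.push({ screen, metric, limit, value });
      }
    }
  }
  return violations;
}

const violations = findBudgetViolations(budgets, {
  dashboard: { loadTimeMs: 800, bundleKb: 310 },
  transactions: { loadTimeMs: 900, bundleKb: 280 },
});
console.log(violations);
// [ { screen: 'dashboard', metric: 'bundleKb', limit: 250, value: 310 } ]
```

Failing the build when the returned list is non-empty is one way to enforce the budget as new features are added.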

“The UX improvements were just as important as the backend optimizations. Users don’t care about your architecture—they care about how the app feels. The perception of speed is sometimes more important than actual speed.”

Key Metrics: Measuring Our Success

Throughout the scaling process, we tracked several key metrics to measure our progress and identify areas for further optimization:

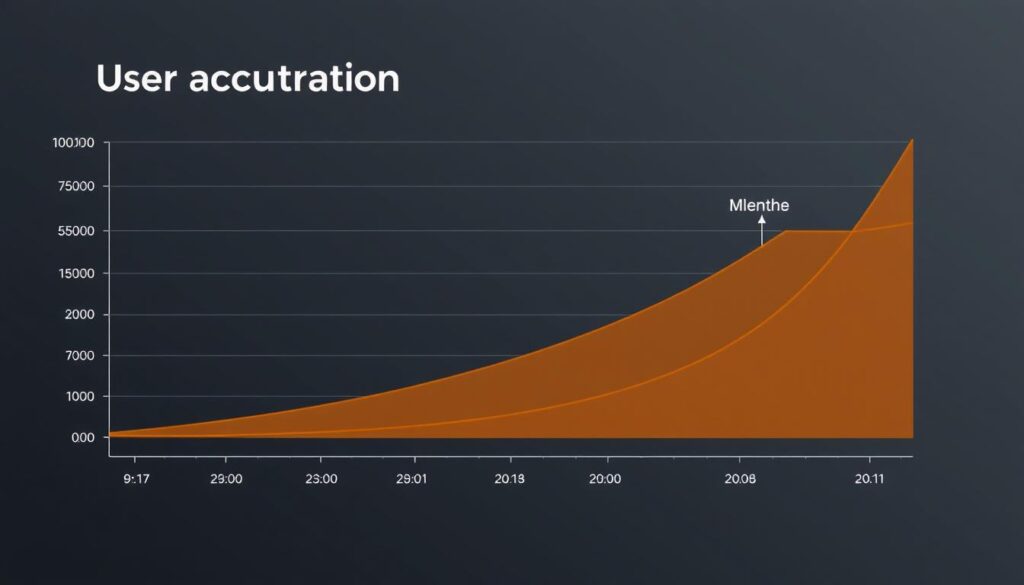

User growth over the 6-month scaling period

User Growth and Retention

- User Growth: From 5,000 to 105,000 active users in 6 months

- User Retention: Improved 30-day retention from 42% to 68%

- Session Duration: Increased average session time from 2.5 to 4.2 minutes

- Feature Adoption: 78% of users now using at least 3 core features (up from 45%)

Performance Improvements

- API Response Time: Reduced from 3.2s to 0.2s average

- Dashboard Load Time: Reduced from 5.1s to 0.8s

- 99th Percentile Response: Improved from 12s to 1.2s

- Error Rate: Reduced from 4.2% to 0.3%
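For readers unfamiliar with the 99th percentile figure above, the sketch below computes a percentile with the nearest-rank method. The sample latencies are made up for illustration and are not data from the case study; monitoring systems usually estimate percentiles from histograms rather than from raw samples.

```javascript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples, p) {
  if (samples.length === 0) {
    throw new Error('percentile requires at least one sample');
  }
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Illustrative latencies in milliseconds (not data from the case study).
const latenciesMs = [120, 95, 180, 210, 150, 3000, 140, 130, 160, 110];
console.log(percentile(latenciesMs, 50)); // 140
console.log(percentile(latenciesMs, 99)); // 3000
```

The gap between the two results shows why averages and medians can hide the slow requests that users actually notice.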

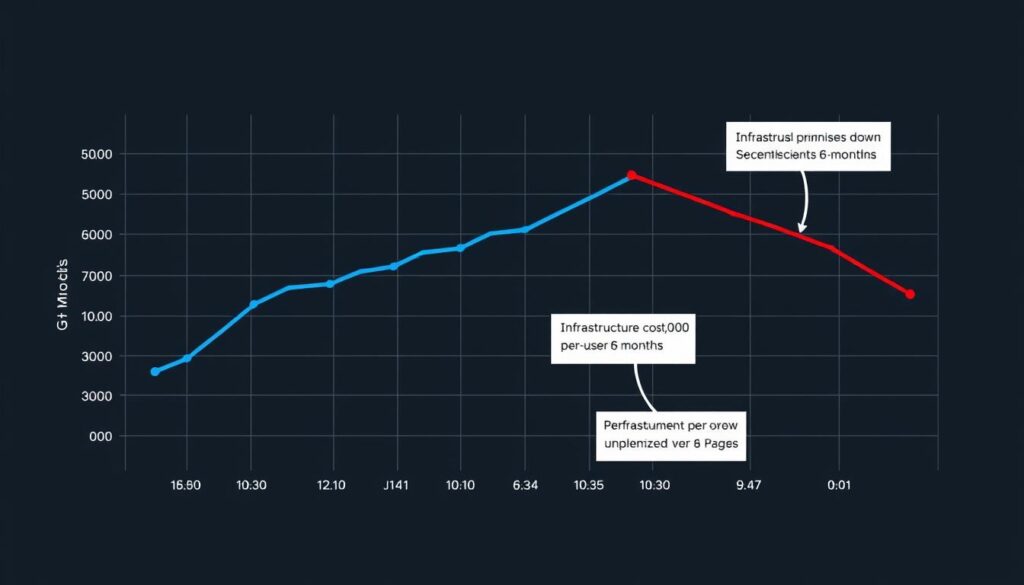

Infrastructure Efficiency

Infrastructure cost per user decreased as user count increased

Tools & Technologies: Our Scaling Tech Stack

The successful scaling of the application relied on a carefully selected set of tools and technologies. Here’s what we used:

Infrastructure & Deployment

- AWS: EC2, RDS, ElastiCache, S3, CloudFront

- Docker: Container management

- Kubernetes: Container orchestration

- Terraform: Infrastructure as code

- GitHub Actions: CI/CD pipeline

Backend & Data

- Node.js: Primary application runtime

- PostgreSQL: Primary database

- Redis: Caching and session management

- RabbitMQ: Message queue for async processing

- Python: Data processing and analytics

Monitoring & Analytics

- Prometheus: Metrics collection

- Grafana: Metrics visualization

- ELK Stack: Log management

- New Relic: Application performance monitoring

- Firebase Analytics: User behavior tracking

Technology Selection Criteria

Our technology choices were guided by several key principles:

What We Prioritized

- Proven scalability with documented success at 100k+ user scale

- Strong community support and active development

- Compatibility with existing team skills to minimize learning curve

- Cost-effectiveness at scale with predictable pricing

- Robust monitoring and debugging capabilities

What We Avoided

- Bleeding-edge technologies without proven production use

- Solutions with licensing costs that scale linearly with users

- Tools with steep learning curves that would slow implementation

- Architectures that would create new single points of failure

- Vendor lock-in that would limit future flexibility

Complete architecture diagram showing integration of all technologies

```yaml
# Example Kubernetes deployment for the Transaction Service
apiVersion: apps/v1
kind: Deployment
metadata:
  name: transaction-service
spec:
  replicas: 3
  selector:
    matchLabels:
      app: transaction-service
  template:
    metadata:
      labels:
        app: transaction-service
    spec:
      containers:
        - name: transaction-service
          image: example/transaction-service:latest
          resources:
            limits:
              cpu: "1"
              memory: "1Gi"
            requests:
              cpu: "0.5"
              memory: "512Mi"
          env:
            - name: NODE_ENV
              value: "production"
            - name: DB_CONNECTION_STRING
              valueFrom:
                secretKeyRef:
                  name: db-secrets
                  key: connection-string
          ports:
            - containerPort: 3000
          readinessProbe:
            httpGet:
              path: /health
              port: 3000
            initialDelaySeconds: 5
            periodSeconds: 10
```

Lessons Learned: Key Insights for Scaling Your App

Throughout this six-month scaling journey, we gained valuable insights that can help other teams successfully scale their applications. Here are the most important lessons we learned:

1. Database Optimization Comes First

The single most impactful change was optimizing database queries and implementing proper indexing. No amount of application-level optimization can compensate for a poorly performing database. Start by identifying your most expensive queries and optimize them before adding more infrastructure.

Actionable Tip: Run EXPLAIN ANALYZE on your slowest queries and focus on those that scan large tables without using indexes.
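As one way to act on this tip, the sketch below scans the text output of `EXPLAIN ANALYZE` for sequential scans that read many rows. The `findLargeSeqScans` helper, the row threshold, and the sample plan are hypothetical; the plan text is illustrative and not output from the client's database.

```javascript
// Hypothetical helper: find sequential scans in EXPLAIN ANALYZE text output
// and estimate how many rows each one read.
function findLargeSeqScans(planText, minRowsScanned = 10000) {
  const scanPattern =
    /Seq Scan on (\S+).*\(actual time=[\d.]+\.\.[\d.]+ rows=(\d+) loops=(\d+)\)/;
  const removedPattern = /Rows Removed by Filter: (\d+)/;
  const scans = [];
  let current = null;

  for (const line of planText.split('\n')) {
    const scan = scanPattern.exec(line);
    if (scan) {
      current = { table: scan[1], returned: Number(scan[2]), removed: 0, loops: Number(scan[3]) };
      scans.push(current);
    } else if (line.includes('(cost=')) {
      current = null; // a different plan node starts here
    } else if (current) {
      const removed = removedPattern.exec(line);
      if (removed) current.removed = Number(removed[1]);
    }
  }

  // Rows read = rows returned plus rows discarded by the filter, per loop.
  return scans
    .map((s) => ({ table: s.table, rowsScanned: (s.returned + s.removed) * s.loops }))
    .filter((s) => s.rowsScanned >= minRowsScanned);
}

// Illustrative plan text, not output from the client's database.
const plan = [
  'Seq Scan on transactions  (cost=0.00..20834.00 rows=95 width=37) (actual time=0.020..98.411 rows=100 loops=1)',
  '  Filter: (user_id = 42)',
  '  Rows Removed by Filter: 999900',
  'Planning Time: 0.090 ms',
  'Execution Time: 98.450 ms',
].join('\n');

console.log(findLargeSeqScans(plan));
// [ { table: 'transactions', rowsScanned: 1000000 } ]
```

A scan that reads a million rows to return a hundred, as in this made-up plan, is the kind of query where an index on the filtered column is worth testing.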

2. Caching Strategy Is Critical

A well-implemented caching strategy can reduce database load by 80%+ while dramatically improving user experience. However, cache invalidation must be carefully designed to prevent stale data. We found that a combination of time-based expiration and event-based invalidation worked best.

Actionable Tip: Start by caching your most frequently accessed, rarely changing data (such as user profiles and configuration settings).

3. Microservices Aren’t Always the Answer

While breaking up our monolith improved scalability, we learned that not everything needs to be a separate service. We initially over-fragmented the application, creating unnecessary complexity. We later consolidated some services that had high communication overhead.

Actionable Tip: Only extract services that have clear boundaries and independent scaling needs.

4. Monitoring Is Non-Negotiable

Comprehensive monitoring was essential for identifying bottlenecks and verifying improvements. Without proper observability, we would have been flying blind. The investment in setting up Prometheus, Grafana, and log aggregation paid off many times over.

Actionable Tip: Implement monitoring before making major changes, not after problems occur.

5. User Experience Can’t Be Sacrificed

Technical optimizations alone weren’t enough. The UX improvements—particularly progressive loading and perceived performance enhancements—were crucial for maintaining user satisfaction during growth. Users are often more sensitive to consistent performance than raw speed.

Actionable Tip: Implement skeleton screens and optimistic UI updates to make your app feel faster, even when it’s processing data.
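An optimistic update can be sketched as "apply the change locally now, undo it if the server rejects it". The store shape, `renameCategory`, and `saveToServer` below are hypothetical names used only for illustration.

```javascript
// Hypothetical sketch: apply a change to local state immediately and keep
// a way to undo it if the server later rejects the request.
function createStore(initialState) {
  let state = initialState;
  return {
    getState: () => state,
    // Applies `patch` right away and returns a rollback function.
    applyOptimistic(patch) {
      const previous = state;
      state = { ...state, ...patch };
      return function rollback() {
        state = previous;
      };
    },
  };
}

// `saveToServer` is a placeholder for a real API call.
async function renameCategory(store, saveToServer, name) {
  const rollback = store.applyOptimistic({ categoryName: name });
  try {
    await saveToServer(name);
  } catch (err) {
    rollback(); // restore the old value so the UI matches the server again
    throw err;
  }
}
```

This version restores a whole snapshot on failure, so an app with several updates in flight at once would need finer-grained rollback.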

6. Automate Everything Possible

As we scaled, manual processes became bottlenecks and sources of errors. Investing in automation—from deployment to testing to monitoring—was essential for maintaining quality and speed as complexity increased.

Actionable Tip: Start with automating your most frequent and error-prone tasks, then gradually expand your CI/CD pipeline.

“The most important lesson was that scaling isn’t just a technical challenge—it’s about creating systems that can evolve and adapt as your user base grows. The solutions that work at 10,000 users might not work at 100,000, and what works at 100,000 might not work at 1 million.”

FAQ: Common Questions About App Scaling

How do I know if my app needs to be scaled?

Look for these warning signs:

- Increasing response times during peak usage

- Database CPU consistently above 70%

- Memory usage steadily climbing

- Error rates increasing with user growth

- User complaints about performance

If you’re experiencing two or more of these symptoms, it’s time to start planning your scaling strategy.
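The checklist above can be turned into a small helper, sketched below. The 70% CPU threshold and the "two or more" rule come from the list; the field names and the shape of the `signals` object are assumptions.

```javascript
// Hypothetical sketch of the checklist above. The 70% CPU threshold and the
// "two or more" rule come from the text; the field names are assumptions.
function scalingWarningSigns(signals) {
  const checks = {
    slowPeakResponses: signals.peakResponseTimesIncreasing === true,
    highDatabaseCpu: signals.databaseCpuPercent > 70,
    memoryClimbing: signals.memoryUsageClimbing === true,
    errorsGrowingWithUsers: signals.errorRateIncreasing === true,
    performanceComplaints: signals.userComplaints === true,
  };
  const triggered = Object.keys(checks).filter((name) => checks[name]);
  return { triggered, shouldPlanScaling: triggered.length >= 2 };
}

console.log(
  scalingWarningSigns({ databaseCpuPercent: 85, peakResponseTimesIncreasing: true })
);
// { triggered: [ 'slowPeakResponses', 'highDatabaseCpu' ], shouldPlanScaling: true }
```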

How much does it typically cost to scale an app to 100k users?

The cost varies widely depending on your application’s complexity, current architecture, and efficiency. For a moderately complex application, infrastructure costs at the 100k user level typically run to thousands of dollars per month. Engineering costs for implementing scaling solutions can reach tens of thousands of dollars or more, depending on the extent of refactoring needed.

However, these costs should be weighed against the revenue potential of supporting 100k users and the cost of lost users due to performance issues.

Should I use serverless architecture to scale my app?

Serverless architecture can be excellent for scaling certain workloads, particularly those with variable or unpredictable traffic patterns. It eliminates the need to provision and manage servers while providing automatic scaling.

However, serverless isn’t ideal for all applications. Long-running processes, applications with predictable high traffic, or those with specific performance requirements may be better suited to container-based or traditional architectures. Serverless can also become expensive at scale for certain workload patterns.

We recommend a hybrid approach for most applications, using serverless for appropriate components while maintaining traditional architecture for others.

How long does it typically take to scale an app from 5k to 100k users?

In our experience, a comprehensive scaling project typically takes 4-8 months, depending on the application’s complexity and the current state of the architecture. Our case study timeline of 6 months is fairly typical.

The process can be accelerated if the application was well-designed from the beginning with scalability in mind. Conversely, applications with significant technical debt or architectural issues may take longer to scale effectively.

Do I need to rewrite my app to scale it effectively?

A complete rewrite is rarely necessary or advisable. Most applications can be scaled through strategic refactoring, optimization, and infrastructure improvements. In our case study, we maintained the core application while gradually improving its components.

That said, certain components may need significant refactoring if they were not designed with scalability in mind. The key is to identify the specific bottlenecks and address them incrementally rather than attempting a high-risk complete rewrite.

How can I get help with scaling my application?

If you’re facing scaling challenges with your application, our team of experts can help. We offer:

- Comprehensive scaling assessments to identify bottlenecks

- Custom scaling strategies tailored to your specific application

- Implementation support from experienced engineers

- Ongoing optimization and monitoring

Contact us to schedule a free initial consultation where we can discuss your specific challenges and how we might help.

Conclusion: From 5k to 100k and Beyond

Scaling an application from 5,000 to 100,000 users is a significant achievement that requires a strategic approach to both technical architecture and user experience. In this case study, we’ve shown how a combination of database optimization, effective caching, microservices architecture, modern infrastructure, and UX improvements enabled our client’s fintech app to grow from 5,000 to more than 100,000 users while reducing infrastructure cost per user.

The key to successful scaling isn’t just adding more servers—it’s about building systems that can evolve efficiently as your user base grows. By addressing bottlenecks methodically and implementing the right technologies at the right time, we created a foundation that will support continued growth well beyond 100,000 users.

“What impressed us most wasn’t just reaching 100,000 users—it was how the application performance actually improved as we grew. We now have the confidence to scale to millions of users without rebuilding our core systems.”

Whether you’re currently at 1,000 users or 50,000, the principles outlined in this case study can help you build a more scalable, resilient application. The journey to scale isn’t always easy, but with the right approach, it’s certainly achievable.

Ready to scale your application?

Our team of scaling experts can help you identify bottlenecks and implement proven solutions.