Python has become a standard tool for data science. Its libraries cover the whole workflow, from data cleaning to machine learning.

This article covers the basics of using Python for data science and shows how the pieces fit together into data-driven apps. It’s aimed at both newcomers and experienced data analysts.

Key Takeaways

- Understand the fundamental concepts and libraries of Python for data science

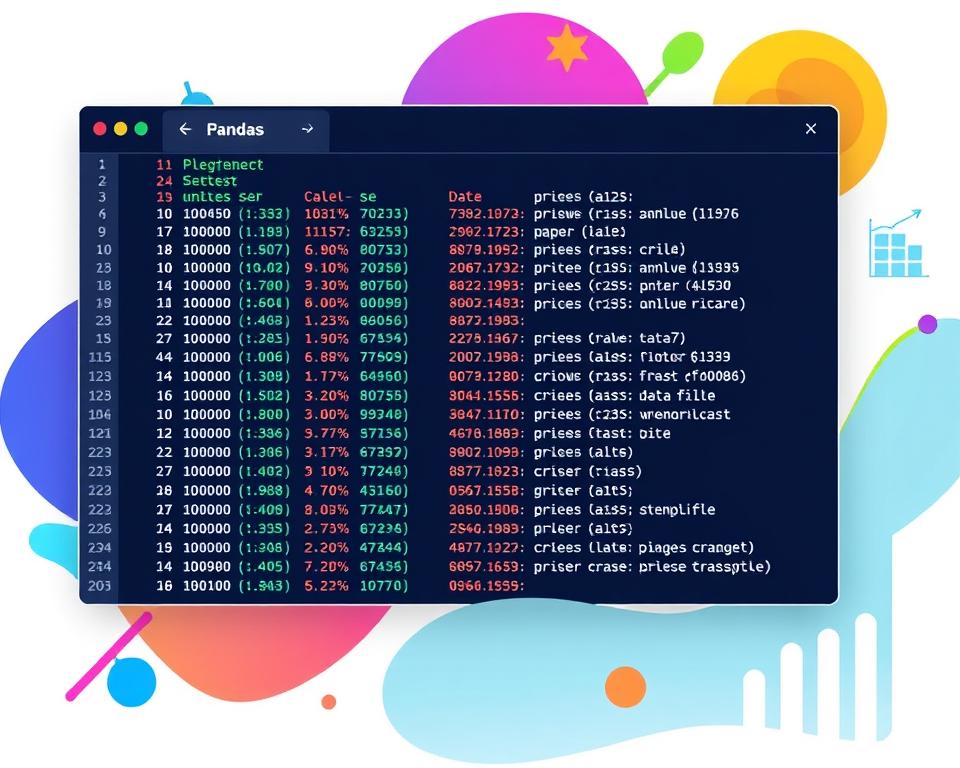

- Explore techniques for data manipulation and analysis using Pandas

- Discover the power of data visualization with Matplotlib and Seaborn

- Integrate machine learning models with Scikit-learn

- Build web-based data applications using the Flask framework

- Leverage NumPy for advanced mathematical and statistical computations

- Implement real-time data processing and streaming techniques

Getting Started with Python for Data Science: Essential Tools and Setup

Starting your data science journey with Python means setting up the right tools. We’ll show you how to get your Python ready and introduce key libraries for data science.

Installing Python and Key Data Science Libraries

The base of your data science work is Python. First, download the latest Python from the official site and install it. This gives you the basics to start with Python data science tools.

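Then, install the main data science libraries. You’ll need NumPy for numerical computing, Pandas for data manipulation, and Matplotlib for visualization. Install them with pip, Python’s package manager, which is included with the official installers, for example `pip install numpy pandas matplotlib`.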

Setting Up Your Development Environment

For easier data science projects, use an interactive environment like Jupyter Notebook or an IDE like Spyder. Both offer a friendly interface and code editing, and both work well with data science libraries.

Or, try cloud platforms like Google Colab or Kaggle Notebooks. They give a ready setup and skip the need for local software.

Understanding Package Management with pip

The Python Package Index (PyPI) is the main public repository of Python packages. pip, the installer, lets you install, update, and remove packages from the command line, which makes it easy to add new tools and dependencies to your projects.
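One way to confirm what pip has installed is to query package metadata from inside Python. The sketch below uses the standard library’s `importlib.metadata`; the helper name `installed_version` is made up for this example and is not part of pip or any library.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package_name)
    except PackageNotFoundError:
        return None


# Report on the core libraries mentioned in this section.
for name in ("numpy", "pandas", "matplotlib"):
    found = installed_version(name)
    if found is None:
        print(f"{name}: not installed (try: pip install {name})")
    else:
        print(f"{name}: {found}")
```

Running this after a fresh setup is a quick sanity check before starting a project.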

By doing these steps, you’ll be set for Python in data science. With the right tools and setup, you’re ready to create powerful, data-driven apps.

Fundamentals of Data Manipulation with Pandas

Exploring the Pandas library is crucial for data science projects. It’s Python’s top tool for handling data. Pandas makes working with structured data easy, helping you clean, transform, and analyze data.

The DataFrame and Series are at the core of Pandas. The DataFrame is like a spreadsheet, and the Series is like a column. These tools let you filter, sort, and merge data, making Pandas essential for data work.

- Exploring the Pandas DataFrame: Learn to create, access, and manipulate DataFrames. Use its indexing and slicing to get the most out of your data.

- Working with Pandas Series: Use Series for precise operations on columns or rows. This lets you refine and transform your data accurately.

- Cleaning and Preprocessing Data: Find out how to deal with missing values, remove duplicates, and standardize data. This prepares your data for analysis.

- Filtering and Selecting Data: Discover advanced filtering techniques in Pandas. Use them to get specific data subsets, focusing your analysis.

- Performing Data Transformations: Learn about Pandas’ data transformation functions. From simple math to complex reshaping and aggregation, there’s a lot to explore.
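The operations in the list above can be sketched in a few lines. The data here is invented for illustration, and the column names (`region`, `units`, `price`) are assumptions, not part of any real dataset.

```python
import numpy as np
import pandas as pd

# Hypothetical sales records with one missing value and one duplicate row.
df = pd.DataFrame(
    {
        "region": ["north", "south", "south", "east", "east"],
        "units": [10, 5, 5, np.nan, 8],
        "price": [2.5, 4.0, 4.0, 3.0, 3.0],
    }
)

# Cleaning: drop exact duplicate rows, then fill the missing unit count with 0.
clean = df.drop_duplicates().copy()
clean["units"] = clean["units"].fillna(0)

# Transformation: derive a new column from existing ones.
clean["revenue"] = clean["units"] * clean["price"]

# Filtering: a boolean mask keeps only rows with revenue above 20.
high_revenue = clean[clean["revenue"] > 20]

# Aggregation: total revenue per region (the result is a Series).
revenue_by_region = clean.groupby("region")["revenue"].sum()

print(high_revenue)
print(revenue_by_region)
```

Whether to fill missing values with 0, drop them, or impute them depends on the data; filling with 0 is just the simplest choice for a demo.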

Mastering Pandas basics will unlock data manipulation power in your Python projects. Use this library’s flexibility and efficiency to create valuable data insights.

“Pandas is the foundation of the Python data science ecosystem, providing powerful and flexible data structures, data analysis tools, and data visualization capabilities.”

Data Visualization Techniques Using Matplotlib and Seaborn

Data visualization is key for data scientists and analysts. We’ll look at Matplotlib and Seaborn, tools for making charts and plots that tell stories with data.

Creating Basic Plots and Charts

We’ll start with Matplotlib basics. It’s great for making line plots, scatter plots, and more. Learning these basics helps you share data insights quickly.
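As a minimal sketch of those basics, the example below draws a line plot and a scatter plot side by side. The monthly figures are invented, and the `Agg` backend is chosen only so the script runs without a display.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so no window is needed
import matplotlib.pyplot as plt

# Illustrative data: values invented for this example.
months = [1, 2, 3, 4, 5, 6]
sales = [12, 15, 14, 18, 21, 24]

fig, (ax_line, ax_scatter) = plt.subplots(1, 2, figsize=(8, 3))

# Line plot: good for showing a trend over an ordered axis.
ax_line.plot(months, sales, marker="o")
ax_line.set_title("Line plot")
ax_line.set_xlabel("Month")
ax_line.set_ylabel("Sales")

# Scatter plot: good for showing the relationship between two variables.
ax_scatter.scatter(months, sales)
ax_scatter.set_title("Scatter plot")
ax_scatter.set_xlabel("Month")

fig.tight_layout()

# Render to an in-memory PNG; pass a file name instead to write to disk.
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
```

In a Jupyter notebook you would usually skip the backend line and the buffer, and let the figure display inline.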

Advanced Visualization Methods

Next, we’ll dive into Seaborn’s advanced plots. It has heatmaps, violin plots, and more. These help find complex data patterns, leading to deeper insights.
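Here is a small sketch of two of those plot types, a correlation heatmap and a violin plot. The data is randomly generated, and the column names are placeholders chosen for this example.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so no window is needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Synthetic data: y depends on x plus some noise, split into two groups.
rng = np.random.default_rng(0)
df = pd.DataFrame(
    {
        "group": np.repeat(["a", "b"], 50),
        "x": rng.normal(size=100),
    }
)
df["y"] = 2 * df["x"] + rng.normal(scale=0.5, size=100)

fig, (ax_heat, ax_violin) = plt.subplots(1, 2, figsize=(9, 4))

# Heatmap of the correlation matrix, with each cell annotated.
corr = df[["x", "y"]].corr()
sns.heatmap(corr, annot=True, vmin=-1, vmax=1, cmap="coolwarm", ax=ax_heat)

# Violin plot: the distribution of y within each group.
sns.violinplot(data=df, x="group", y="y", ax=ax_violin)

fig.tight_layout()
```

Because Seaborn draws onto Matplotlib axes, you can mix both libraries in one figure, as done here.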

Interactive Visualizations

Finally, we’ll explore interactive plots. Tools like Plotly and Bokeh make web-based plots interactive. These plots let users zoom, pan, and filter data, enhancing the user experience.

By the end, you’ll know how to use data visualization, Matplotlib, and Seaborn to make charts and plots. These will effectively share data insights.

| Library | Description |
|---|---|
| Matplotlib | A comprehensive library for creating static, publication-quality charts and plots. |
| Seaborn | A high-level data visualization library built on top of Matplotlib, providing more advanced data visualization techniques. |

Machine Learning Integration with Scikit-learn

In data science, adding machine learning algorithms is key. Scikit-learn, a top Python library, makes this easy. It helps with predictive modeling, classification, and regression tasks.

Scikit-learn is versatile. It has many algorithms, from simple to complex. This lets data scientists pick the best model for their problem.

Its easy API and clear documentation make it simple to use. You can build, train, and improve models quickly in Python.
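The build, train, and evaluate loop might look like the sketch below. It uses a synthetic dataset and logistic regression purely as an example; the right model and parameters depend on your problem.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic binary classification data, generated for illustration only.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)

# Hold out 25% of the rows to measure performance on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Build and train the model.
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Evaluate on the held-out set.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Test accuracy: {accuracy:.2f}")
```

Most Scikit-learn estimators share the same `fit` and `predict` methods, so swapping in a different algorithm usually means changing only the line that creates the model.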

Scikit-learn is great for both new and experienced data scientists. It helps unlock insights and drive smart decisions. This leads to innovative solutions for your audience’s needs.

“Scikit-learn is the most user-friendly and efficient open-source machine learning library for Python.”

Building Web-Based Data Applications with Flask

In data science, turning insights into web apps is key. Flask, a lightweight Python tool, is perfect for this. It lets you build RESTful APIs and handle data requests, making your analysis interactive and user-friendly.

Setting Up Flask Framework

Starting with Flask is easy. First, install it with pip. Then, create a basic app structure, define routes, and handle HTTP requests. Flask’s simplicity helps you focus on your app’s core, without extra complexity.

Creating RESTful APIs

Flask is great for making RESTful APIs. You define routes and map them to Python functions. This exposes your data analysis as a web service, making it easy to integrate with other systems.

Handling Data Requests

Flask makes handling data requests simple. It supports various data types, like form fields and JSON payloads. This lets you efficiently process and respond to user interactions, enhancing your app’s interactivity.
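To make the three steps above concrete, here is a minimal sketch of a Flask app with one GET route and one POST route that accepts a JSON payload. The route paths, the `values` field, and the helper `_is_number` are all invented for this example.

```python
from statistics import mean

from flask import Flask, jsonify, request

app = Flask(__name__)


@app.route("/api/health", methods=["GET"])
def health():
    """Simple GET route to confirm the service is up."""
    return jsonify({"status": "ok"})


def _is_number(value):
    """True for ints and floats, but not booleans."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


@app.route("/api/summary", methods=["POST"])
def summary():
    """Accept JSON like {"values": [1, 2, 3]} and return basic statistics."""
    payload = request.get_json(silent=True)
    values = payload.get("values") if isinstance(payload, dict) else None
    if (
        not isinstance(values, list)
        or not values
        or not all(_is_number(v) for v in values)
    ):
        # Reject malformed input with a 400 status and an error message.
        return jsonify({"error": "'values' must be a non-empty list of numbers"}), 400
    return jsonify(
        {
            "count": len(values),
            "mean": mean(values),
            "min": min(values),
            "max": max(values),
        }
    )
```

You can exercise the routes without starting a server by using Flask’s built-in test client, for example `app.test_client().post("/api/summary", json={"values": [1, 2, 3]})`. Assuming the file is saved as `app.py`, recent Flask versions can serve it locally with `flask --app app run`.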

| Flask Feature | Description |
|---|---|
| Lightweight and Flexible | Flask is a minimalist web framework, allowing you to focus on the core functionality of your data-driven web application without the overhead of a more complex framework. |
| RESTful API Development | Flask simplifies the creation of RESTful APIs, making it easy to expose your data analysis and processing capabilities as a web service. |
| Efficient Data Request Handling | Flask provides a straightforward way to manage incoming data requests, enabling you to process and respond to user interactions in your data-driven web application. |

Flask turns your data science projects into web apps that work well with other systems. It’s great for data visualization, predictive analytics, or custom business intelligence solutions. Flask makes your data-driven ideas come to life efficiently.

Database Integration and Management

Data science projects are getting more complex. This means we need better ways to store and get data. Python has many libraries that make working with databases easy, both SQL and NoSQL. This guide will help you add database management to your Python data apps.

Exploring SQL and NoSQL Databases

SQL databases, like PostgreSQL and MySQL, are great for structured data. They keep data organized with schemas and relationships. NoSQL databases, such as MongoDB and Cassandra, are better for unstructured data. They offer flexibility and grow well with big data.

| Database Type | Strengths | Use Cases |
|---|---|---|
| SQL | Data integrity, complex querying, transactions | Accounting systems, e-commerce platforms, financial applications |
| NoSQL | Scalability, flexibility, handling unstructured data | Real-time analytics, content management systems, IoT data processing |

Adding these databases to your Python projects lets you use their strengths. This ensures your apps can handle big data needs.

Knowing the strengths and uses of SQL and NoSQL databases helps you choose the right one. This is key for database integration, data storage, or data retrieval in your projects.
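As a sketch of the SQL side, the example below uses SQLite through the standard library’s `sqlite3` module. SQLite is swapped in here only because it needs no server; PostgreSQL or MySQL would require their own driver packages and connection settings. The `orders` table and its columns are invented for this example.

```python
import sqlite3

# An in-memory database, so nothing is written to disk.
connection = sqlite3.connect(":memory:")

# A schema gives the data a fixed structure.
connection.execute(
    """
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer TEXT NOT NULL,
        amount REAL NOT NULL
    )
    """
)

# Parameterized inserts keep values separate from the SQL text.
connection.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)],
)
connection.commit()

# Retrieval: total order amount per customer.
rows = connection.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
connection.close()

print(rows)
```

The same pattern of connect, execute, and fetch applies to most SQL drivers for Python, though connection details differ.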

Statistical Analysis and Mathematical Computing with NumPy

Python has become a key tool in data science, thanks to NumPy. This library is crucial for stats and math. It makes complex tasks easy with its array operations and linear algebra.

Array Operations and Calculations

NumPy’s core is the ndarray, a multi-dimensional array. It’s perfect for big data. You can do lots of operations on it, like arithmetic and stats.

| NumPy Function | Description |
|---|---|
| np.add() | Performs element-wise addition |
| np.subtract() | Performs element-wise subtraction |
| np.multiply() | Performs element-wise multiplication |
| np.divide() | Performs element-wise division |
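The functions in the table can be tried on two small arrays. The values are arbitrary and chosen so the results are easy to check by hand.

```python
import numpy as np

a = np.array([[1.0, 2.0], [3.0, 4.0]])
b = np.array([[10.0, 20.0], [30.0, 40.0]])

total = np.add(a, b)            # same as a + b
difference = np.subtract(b, a)  # same as b - a
product = np.multiply(a, b)     # element-wise, not matrix multiplication
ratio = np.divide(b, a)         # same as b / a

# Basic statistics along an axis or over the whole array.
column_means = a.mean(axis=0)
overall_std = a.std()

print(total)
print(ratio)
print(column_means, overall_std)
```

The operator forms (`+`, `-`, `*`, `/`) do the same work and are more common in everyday code.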

Mathematical Functions and Linear Algebra

NumPy also has many math functions. Trigonometric, exponential, logarithmic, and statistical functions all operate directly on arrays. This is great for advanced analysis and machine learning.

Its linear algebra module is also impressive. You can multiply, invert, and decompose matrices. This is key for working with big data and building models.
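A short sketch of those linear algebra operations, using a small matrix picked for this example:

```python
import numpy as np

A = np.array([[4.0, 1.0], [2.0, 3.0]])
b = np.array([1.0, 2.0])

# Matrix multiplication uses the @ operator.
product = A @ A

# Inversion: A @ inverse is the identity matrix (up to rounding).
inverse = np.linalg.inv(A)

# Solving A x = b directly is usually preferable to computing the inverse.
x = np.linalg.solve(A, b)

# Decomposition: singular value decomposition, A = U @ diag(s) @ Vt.
U, singular_values, Vt = np.linalg.svd(A)
reconstructed = U @ np.diag(singular_values) @ Vt

print(x)
print(singular_values)
```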

“NumPy is the fundamental package for scientific computing in Python. It is a powerful N-dimensional array object, and it has a large collection of high-level mathematical functions to operate on these arrays.” – NumPy website

Real-Time Data Processing and Streaming

In today’s fast-moving environments, handling data as it arrives is key. Python handles this well when paired with a streaming platform. Apache Kafka, a distributed event streaming platform with Python client libraries, is a common choice for moving data quickly and reliably.

Real-time data work means dealing with data as it comes in. This is super useful for things like catching fraud fast or tracking stock prices. Python and Apache Kafka help make apps that handle real-time data well.
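The idea of handling each event as it arrives can be sketched without any streaming infrastructure. In the example below, a plain Python iterator stands in for a live feed such as a Kafka topic, and the function `flag_anomalies`, its threshold rule, and the transaction amounts are all invented for illustration.

```python
from collections import deque


def flag_anomalies(stream, window_size=5, threshold=3.0):
    """Yield (value, is_anomaly) for each event as it arrives.

    A value is flagged when it exceeds `threshold` times the mean of the
    previous `window_size` values. Only that recent window is kept in memory.
    """
    window = deque(maxlen=window_size)
    for value in stream:
        is_anomaly = False
        if len(window) == window_size:
            baseline = sum(window) / window_size
            is_anomaly = value > threshold * baseline
        yield value, is_anomaly
        window.append(value)


# Hypothetical transaction amounts standing in for a live feed.
transactions = [20, 22, 19, 21, 20, 250, 23, 18]
flagged = [
    value
    for value, is_anomaly in flag_anomalies(iter(transactions))
    if is_anomaly
]
print(flagged)
```

Because the function is a generator, it never needs the whole dataset at once, which is the property that matters for real streams.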

The Power of Apache Kafka

Apache Kafka is a top choice for data streaming. It’s built to handle lots of data quickly and reliably. It’s perfect for data processing in Python apps. Its strong features make it great for fast data pipelines.

- Efficient data ingestion and storage

- Scalable and fault-tolerant architecture

- Seamless integration with Python and other data processing tools

- Reliable and high-throughput data transmission

Using Apache Kafka and Python, developers can make apps that work with real-time data fast and accurately.

“The ability to process data in real-time is transforming industries and unlocking new opportunities for innovation.”

Python for Data Science: Building Data-Driven Apps

In the world of data science, making end-to-end data-driven apps is key. Python is a top choice for this, thanks to its vast library of tools. This section covers the basics of app architecture, data pipelines, and deployment.

Application Architecture Design

Building a solid app architecture is crucial. It means breaking down the app into parts, making it modular and scalable. Developers must align the app’s logic, data flow, and user interface for a smooth experience.

Data Pipeline Implementation

Data-driven apps need a smooth data flow from start to finish. This includes data ingestion, transformation, analysis, and visualization. Using Python libraries like Pandas, and PySpark (the Python API for Apache Spark) for larger workloads, we’ll look at how to build reliable and scalable data pipelines.
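One simple way to structure such a pipeline is as a chain of small functions, one per stage. The sketch below uses Pandas only; the stage names, the CSV layout, and the sensor readings are assumptions made for this example.

```python
import io

import pandas as pd

# Hypothetical raw input; in practice this would be a file, database, or API.
RAW_CSV = """timestamp,sensor,reading
2024-01-01 00:00,a,1.0
2024-01-01 00:05,a,
2024-01-01 00:10,b,3.5
2024-01-01 00:15,b,4.5
"""


def ingest(source):
    """Ingestion: load raw records into a DataFrame."""
    return pd.read_csv(source, parse_dates=["timestamp"])


def transform(frame):
    """Transformation: drop rows whose reading is missing."""
    return frame.dropna(subset=["reading"]).reset_index(drop=True)


def analyze(frame):
    """Analysis: mean reading per sensor."""
    return frame.groupby("sensor")["reading"].mean()


def run_pipeline(source):
    """Run all stages in order."""
    return analyze(transform(ingest(source)))


summary = run_pipeline(io.StringIO(RAW_CSV))
print(summary)
```

Keeping each stage as its own function makes it possible to test and replace stages independently.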

Deployment Strategies

Deploying your app effectively is the last step. This includes using containers, cloud hosting, and CI/CD workflows. Modern strategies ensure your app is scalable, available, and adaptable to changes.

In this section, we’ll explore how to implement these concepts with Python. You’ll learn to build impactful data-driven apps. With these skills, you’ll be ready to create innovative solutions.

| Component | Description |
|---|---|
| Application Architecture | The overall structure and organization of the data-driven application |
| Data Pipelines | The seamless flow of data from ingestion to analysis and visualization |
| Deployment Strategies | The methods and techniques used to deploy the data-driven application |

Performance Optimization and Scaling

As data science projects with Python get bigger, making them run faster and handle more data is key. This part talks about performance optimization, scaling, parallel processing, and distributed computing. These strategies help your data apps do well under pressure.

First, find and fix slow spots in your code. Use profiling tools, such as the standard library’s cProfile, to see where your app spends most of its time. Then, use parallel processing, for example with the multiprocessing module, to spread CPU-bound work across more than one core.
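Finding a slow spot might look like the sketch below, which profiles a deliberately slow function with the standard library’s `cProfile` and `pstats`. Both functions are invented for this example; the fast version uses the closed-form formula for a sum of squares.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Sum i*i for i in 0..n-1 with an explicit loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_sum_of_squares(n):
    """Same result from the closed-form formula (n-1) * n * (2n-1) / 6."""
    return (n - 1) * n * (2 * n - 1) // 6


# Profile a single call and collect timing statistics.
profiler = cProfile.Profile()
result = profiler.runcall(slow_sum_of_squares, 200_000)

# Print the five entries with the highest cumulative time.
report = io.StringIO()
pstats.Stats(profiler, stream=report).sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

The report shows which functions dominate the run time, which tells you where optimization or parallel processing is worth the effort.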

Distributed computing is a big help for big data projects. Tools like Apache Spark or Dask let you spread out your work on many machines. This makes handling huge amounts of data easier and makes your system more reliable.

- Find and fix slow parts of your code

- Use parallel processing to speed up your app

- Try distributed computing for big data tasks

- Keep checking and improving your app’s speed

Using these methods in your data science work makes sure your Python apps can meet today’s data needs. They will run fast and grow with your data.

Error Handling and Debugging Techniques

Exploring Python data science is exciting but also challenging. You’ll face errors while working with data and developing applications. Learning how to handle and debug these errors is key to making your data-driven apps reliable.

Common Data Processing Errors

There are many types of data processing errors. These include syntax mistakes, type mismatches, missing data, and logical problems. Here are some common ones:

- SyntaxError: Invalid Python syntax that stops the code from running.

- TypeError: Applying an operation to values whose types don’t support it.

- IndexError: Trying to access data outside its allowed range in lists or arrays.

- ValueError: Passing a value of the right type but with invalid content to a function or operation.

- FileNotFoundError: Trying to open a file that doesn’t exist or can’t be found.
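Several of the runtime errors listed above can be handled with `try` and `except`. The helper functions below are invented for this sketch. A SyntaxError is not shown because it stops the code before it runs at all.

```python
def load_numbers(path):
    """Read one number per line, skipping lines that cannot be parsed."""
    numbers = []
    try:
        with open(path, encoding="utf-8") as handle:
            for line_number, line in enumerate(handle, start=1):
                try:
                    numbers.append(float(line))
                except ValueError:
                    # The text on this line is not a valid number.
                    print(f"Line {line_number}: skipping {line.strip()!r}")
    except FileNotFoundError:
        print(f"File not found: {path}")
    return numbers


def safe_get(items, index, default=None):
    """Return items[index], or a default if the index is out of range."""
    try:
        return items[index]
    except IndexError:
        return default


def describe(label, amount):
    """Join a text label and a number, recovering from a TypeError."""
    try:
        return label + amount  # str + int raises TypeError
    except TypeError:
        return label + str(amount)


print(load_numbers("no_such_file_for_this_example.txt"))
print(safe_get([1, 2, 3], 10, default=0))
print(describe("total: ", 5))
```

Catching specific exception types, rather than a bare `except`, keeps unexpected errors visible.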

Debugging Strategies

Good debugging is essential for finding and fixing these errors. Here are some effective ways to debug Python data science code:

- Print Statements: Use print statements to see how your code is running and find where problems might be.

- Logging: Set up a logging system to get detailed info about your program’s actions. This helps track down errors.

- Debugger Tools: Use tools like the Python Debugger (pdb) or IDE debuggers to go through your code step by step and check variables.

- Unit Testing: Write detailed unit tests to check if each part of your app works right. This catches errors early.
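As a small sketch of the logging strategy, the example below records what a function receives and warns about edge cases. The logger name `pipeline` and the function `normalize` are invented for this example.

```python
import logging

# Show DEBUG messages and above, with a timestamp and level on each line.
logging.basicConfig(
    level=logging.DEBUG,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logger = logging.getLogger("pipeline")


def normalize(values):
    """Scale values to the range 0..1, logging edge cases along the way."""
    logger.debug("normalize called with %d values", len(values))
    if not values:
        logger.warning("empty input, returning an empty list")
        return []
    low, high = min(values), max(values)
    if low == high:
        logger.warning("all values equal %s, returning zeros", low)
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


print(normalize([0, 5, 10]))
print(normalize([3, 3]))
```

Unlike print statements, log messages can be filtered by level or redirected to a file without changing the code that emits them. For step-by-step inspection, placing the built-in `breakpoint()` call inside a function drops you into pdb at that line.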

By learning how to handle and debug errors, you can make strong, dependable apps. Remember, every error is a chance to get better at being a Python data scientist.

| Error Type | Description | Example |
|---|---|---|
| SyntaxError | Incorrect Python syntax that prevents the code from executing | Forgetting a colon at the end of a function definition |
| TypeError | Attempting to perform operations on incompatible data types | Trying to add a string and an integer |
| IndexError | Accessing an index outside the bounds of a list, array, or other data structure | Trying to access an element in a list that doesn’t exist |
| ValueError | Passing an invalid value to a function or operation | Converting the string "abc" to a number with int() |
| FileNotFoundError | Attempting to access a file that does not exist or is not accessible | Trying to open a file that doesn’t exist in the specified location |

Testing and Quality Assurance

Creating strong and dependable data-driven apps needs a detailed approach to testing and quality checks. In Python for data science, unit testing and integration testing are key. They help make sure your code and data processing are accurate.

Unit testing checks each part of your Python code separately. It makes sure each piece works as it should. This catches bugs early and keeps your data work running smoothly. Adding unit tests to your project makes a solid base for your app.
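A unit test for a small data-cleaning helper might look like the sketch below, written with the standard library’s `unittest`. The function `fill_missing` is invented for this example.

```python
import unittest


def fill_missing(values, default=0.0):
    """Replace None entries with a default value."""
    return [default if value is None else value for value in values]


class FillMissingTests(unittest.TestCase):
    def test_replaces_none_with_default(self):
        self.assertEqual(fill_missing([1.0, None, 3.0]), [1.0, 0.0, 3.0])

    def test_leaves_complete_data_unchanged(self):
        self.assertEqual(fill_missing([1.0, 2.0]), [1.0, 2.0])

    def test_handles_empty_input(self):
        self.assertEqual(fill_missing([]), [])


# Run the tests programmatically and keep the result object.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(FillMissingTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

In a real project the tests would usually live in their own file and be run from the command line with `python -m unittest`.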

Integration testing looks at how different parts of your app work together. It tests how data moves and interacts between sources, processing, and interfaces. This thorough testing improves your app’s quality and data checks.